AI, APIs, metadata, and data: the digital knowledge and machine intelligence ecosystem

Without a doubt, generative artificial intelligence (AI) has seized the global spotlight this year. As highlighted in Postman Co-Founder and CEO Abhinav Asthana’s recent blog post, artificial intelligence and generative models will fundamentally transform how we develop and interface with software. AI systems and bots will become deeply integrated into our workflows and user experiences, with APIs serving as the critical link enabling these AI agents.

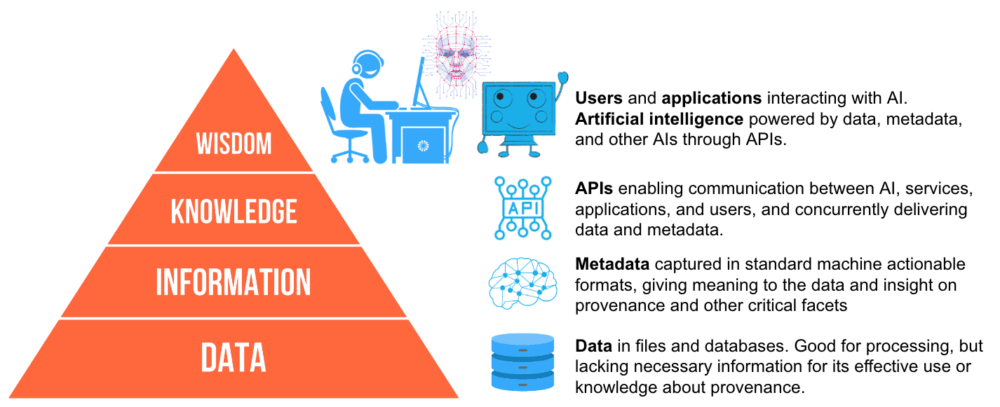

We are at a crucial juncture where we can’t lose sight of the fact that AI is the end product of an ecosystem that includes APIs, metadata, and data. AI cannot exist without this supporting cast, which truly powers its performance. Together, these components form the backbone of our digital world.

A parallel can be drawn between the Data-Information-Knowledge-Wisdom (DIKW) pyramid, a model that represents the transition from raw data to valuable wisdom, and our ecosystem, illustrating the interplay between the ecosystem’s four integral components.

The Postman Open Technologies team is excited to stay at the forefront of these constantly evolving fields, with a strong focus on AI. We continually explore related topics, helping to ensure the Postman API Platform remains responsive to the dynamic needs of developers, researchers, and the open data and AI communities. A prime example is the recent release of Postbot, our AI assistant. Our goal is to empower enterprises to effectively meet customer demands, driving innovation and fostering growth in the digital landscape.

APIs: the connective tissue of the digital ecosystem

Artificial intelligence is intrinsically bound to APIs. Whether it’s through users interacting with AI via web interfaces or applications, the utilization of frameworks like LangChain, or the development of custom applications or plugins, APIs are the unseen yet essential facilitators in the background. They serve as the communication channels between AIs, traditional services, applications, and humans.

Moreover, APIs play a pivotal role in security by establishing secure pathways for data exchange and safeguarding the integrity and confidentiality of information.

When thinking of APIs for AI, it’s important to consider the full set of specifications available to us, such as OpenAPI, AsyncAPI, or GraphQL. SPARQL, the W3C query language and protocol for RDF data, is also likely to play a critical role as a doorway to semantic web frameworks and standards such as RDF, SKOS, and OWL, which offer powerful mechanisms for representing knowledge and describing data.
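To make the SPARQL point concrete, here is a minimal Python sketch, assuming a hypothetical endpoint at `https://example.org/sparql`. It builds a query-via-GET request in the style of the SPARQL 1.1 Protocol (the query travels in a `query` parameter and the client asks for `application/sparql-results+json`) and flattens a response in the SPARQL 1.1 Query Results JSON format. The function names and the SKOS query are illustrative only, and the request is built but never sent.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint used for illustration; substitute a real SPARQL service.
ENDPOINT = "https://example.org/sparql"

# Illustrative query: English preferred labels of SKOS concepts.
QUERY = """PREFIX skos: <http://www.w3.org/2004/02/skos/core#>
SELECT ?concept ?label WHERE {
  ?concept skos:prefLabel ?label .
  FILTER (lang(?label) = "en")
} LIMIT 10"""


def build_request(endpoint: str, query: str) -> urllib.request.Request:
    """Build (but do not send) a SPARQL query-via-GET request asking for JSON results."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"}
    )


def parse_results(payload: str) -> list[dict[str, str]]:
    """Flatten a SPARQL JSON results document into one {variable: value} dict per row."""
    doc = json.loads(payload)
    variables = doc["head"]["vars"]
    rows = []
    for binding in doc["results"]["bindings"]:
        # Optional variables that matched nothing are absent from a binding, so skip them.
        rows.append({v: binding[v]["value"] for v in variables if v in binding})
    return rows
```

Sending the request with `urllib.request.urlopen(build_request(ENDPOINT, QUERY))` and passing the decoded body to `parse_results` would yield plain rows that an application, or an AI agent, could consume.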

As an industry leader, Postman delivers a comprehensive solution for designing, implementing, and managing APIs. It streamlines the process of API development and management, thereby playing a crucial role in the seamless integration and operation of AI systems.

Data: the commodity that powers AI

AI is fundamentally dependent on data. Data is the fuel that powers models and machine learning algorithms, enabling them to learn, adapt, and evolve. Training, the bedrock of machine intelligence, involves feeding data into an AI model so that it can learn patterns, make predictions, and, ultimately, make decisions.
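As a toy illustration of what learning patterns from data means, the Python sketch below fits a straight line to a handful of points using batch gradient descent on the mean squared error. It is a deliberately tiny stand-in for real model training; the function name, learning rate, and epoch count are arbitrary choices made for this example.

```python
def train_linear_model(xs: list[float], ys: list[float],
                       learning_rate: float = 0.01, epochs: int = 5000) -> tuple[float, float]:
    """Fit y ≈ w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start from an uninformed model
    n = len(xs)
    for _ in range(epochs):
        # Compare current predictions with the observed targets.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error**2) with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Points generated from y = 2x + 1; training should recover w ≈ 2 and b ≈ 1.
w, b = train_linear_model([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
prediction = w * 5 + b  # close to 11 for an input the model never saw
```

The same loop of predict, measure error, and adjust parameters underlies far larger models; what changes is the model’s size and the amount and quality of data fed through it.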

The quality and relevance of the data are paramount. High-quality data ensures that the AI model can make accurate and reliable predictions. It’s not just about quantity; it’s about having the right data. This data must be accompanied by relevant metadata, which provides context and makes the data understandable and usable.

AI is not just a consumer of data but also a producer. As AI processes and analyzes data, it generates new knowledge, a valuable resource that must be properly managed in its own right. This knowledge can provide insights into the AI’s decision-making process, improve the transparency of the AI system, and even be used to further train and refine the AI model. In this way, the relationship between AI and data is symbiotic, with each feeding into and enhancing the other.

Ensuring data is discoverable and accessible over APIs is essential to the ecosystem, a mission that the Postman API Platform supports. This can be achieved through the modernization of data infrastructures and services, collaborating with data custodians and users, and fostering the adoption of standards and best practices.
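As a minimal sketch of exposing data over an API, the following Python WSGI application serves a small in-memory dataset as paginated JSON and includes a `next` link so that clients can discover further pages. The `/records` path, the page size, and the dataset are hypothetical; a production service would add authentication, fuller error handling, and a real data store.

```python
import json
from urllib.parse import parse_qs

# Hypothetical in-memory dataset standing in for a real data store.
RECORDS = [{"id": i, "value": i * i} for i in range(1, 26)]
PAGE_SIZE = 10


def data_api(environ, start_response):
    """Minimal WSGI app serving RECORDS as paginated JSON at /records?page=N."""
    headers = [("Content-Type", "application/json")]
    if environ.get("PATH_INFO") != "/records":
        start_response("404 Not Found", headers)
        return [b'{"error": "not found"}']

    query = parse_qs(environ.get("QUERY_STRING", ""))
    try:
        page = max(1, int(query.get("page", ["1"])[0]))
    except ValueError:
        page = 1  # fall back to the first page on malformed input

    start = (page - 1) * PAGE_SIZE
    has_more = start + PAGE_SIZE < len(RECORDS)
    body = {
        "page": page,
        "items": RECORDS[start:start + PAGE_SIZE],
        # A link to the next page lets clients discover the rest of the data.
        "next": f"/records?page={page + 1}" if has_more else None,
    }
    start_response("200 OK", headers)
    return [json.dumps(body).encode("utf-8")]
```

To try it locally, the standard library’s `wsgiref.simple_server.make_server("127.0.0.1", 8000, data_api)` returns a development server whose `serve_forever()` method runs the app; `GET /records?page=2` then returns the second page.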

Metadata: the meaning of the data

Metadata is the key that unlocks the meaning and potential of data. Without metadata, data can be an indecipherable jumble. Metadata makes data understandable and usable by both humans and AIs.

From a transparency perspective, the provenance of the data used to train AI models is of paramount importance. Knowing where the data comes from helps establish the authenticity and reliability of an AI system and clarifies potential issues surrounding accuracy, bias, or licensing.
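One lightweight way to make provenance verifiable is to record where a dataset came from together with a cryptographic checksum of the exact bytes used for training. The Python sketch below assumes a hypothetical record layout (`source_url`, `license_id`, `retrieved_on`, `sha256`); it is not a standard schema, and vocabularies such as W3C PROV model provenance far more richly.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical minimal provenance entry for a training dataset."""
    source_url: str    # where the data was obtained
    license_id: str    # e.g. an SPDX identifier such as "CC-BY-4.0"
    retrieved_on: str  # ISO 8601 date of retrieval
    sha256: str        # hex digest of the exact bytes used for training


def make_record(data: bytes, source_url: str, license_id: str, retrieved_on: str) -> ProvenanceRecord:
    """Create a record whose checksum pins it to these specific bytes."""
    return ProvenanceRecord(source_url, license_id, retrieved_on, hashlib.sha256(data).hexdigest())


def verify(data: bytes, record: ProvenanceRecord) -> bool:
    """Return True only if the bytes at hand are the ones the record describes."""
    return hashlib.sha256(data).hexdigest() == record.sha256


def to_json(record: ProvenanceRecord) -> str:
    """Serialize the record so it can be published alongside the dataset or model."""
    return json.dumps(asdict(record), sort_keys=True)
```

Because any change to the data changes the digest, a published record lets others confirm that a model was trained on the dataset it claims, under the license it claims.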

The metadata challenge is not new: data producers, researchers, and the scientific community have been tackling it for decades. Several standards and best practices have emerged, with the FAIR (Findable, Accessible, Interoperable, Reusable) principles and the W3C Data on the Web Best Practices providing good entry points into the matter.
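For illustration, the Python sketch below describes a dataset with terms from the W3C Data Catalog Vocabulary (DCAT) and Dublin Core, serialized as JSON-LD, and adds a small completeness check. The dataset values and the `REQUIRED` field list are hypothetical choices for this example, not requirements taken from FAIR or DCAT.

```python
import json

# JSON-LD context mapping prefixes to the DCAT and Dublin Core Terms namespaces.
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dcterms": "http://purl.org/dc/terms/",
}

# Hypothetical publisher policy: fields every record must fill in.
REQUIRED = ["dcterms:title", "dcterms:description", "dcterms:license", "dcat:distribution"]


def dataset_metadata(title, description, license_url, keywords, download_url, media_type_iri):
    """Describe one dataset and one downloadable distribution as a JSON-LD dict."""
    return {
        "@context": CONTEXT,
        "@type": "dcat:Dataset",
        "dcterms:title": title,
        "dcterms:description": description,
        "dcterms:license": {"@id": license_url},
        "dcat:keyword": keywords,
        "dcat:distribution": {
            "@type": "dcat:Distribution",
            "dcat:downloadURL": {"@id": download_url},
            "dcat:mediaType": {"@id": media_type_iri},
        },
    }


def missing_fields(record):
    """List required fields that are absent or empty."""
    return [field for field in REQUIRED if not record.get(field)]


# Hypothetical example dataset.
record = dataset_metadata(
    "Air quality readings 2023",
    "Hourly sensor readings (hypothetical example)",
    "https://creativecommons.org/licenses/by/4.0/",
    ["air quality", "sensors"],
    "https://example.org/air-quality-2023.csv",
    "https://www.iana.org/assignments/media-types/text/csv",
)
```

`json.dumps(record, indent=2)` produces a document that could be published alongside the data or served from an API, and a check like `missing_fields` is the kind of small, automatable step that makes standards easier to adopt.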

However, many organizations struggle to implement these standards effectively due to a lack of awareness and resources, limited technical expertise, or the complexity of the standards themselves. Wrapping these best practices in APIs and leveraging AI to address some of these challenges would go a long way toward remedying these metadata weaknesses.

There’s a pressing need to pay more attention to this area, to strengthen data management and ensure the integrity, effectiveness, and transparency of data and AI. And it’s another area where Postman, as an industry leader, plays a critical role in facilitating the development of standards-based APIs and connecting the dots.

AI for better (meta)data

Interestingly, while AI is pictured as the end product of our ecosystem, it also holds great potential to enhance the quality of data and metadata, essentially closing the loop between AI and the data it depends on. AI can be leveraged to analyze vast amounts of data, identify patterns, and infer metadata, thereby enriching the data and making it more valuable for further analysis.

AI’s role in metadata inference is particularly noteworthy. By analyzing the data, AI can generate insightful knowledge about the data that provides additional context, making the data more understandable and usable. This can be particularly useful in scenarios where the original metadata is missing or incomplete.
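The idea of inferring metadata from the data itself can be sketched without any machine learning at all. The Python example below uses simple parsing rules, a plainly rule-based stand-in for the AI techniques discussed here, to guess each CSV column’s type and count its missing values. The type names and function names are assumptions made for this sketch.

```python
import csv
import io


def infer_type(values: list[str]) -> str:
    """Return the narrowest type name that fits every non-empty value."""
    present = [v.strip() for v in values if v.strip()]
    if not present:
        return "unknown"

    def all_parse(parser) -> bool:
        try:
            for v in present:
                parser(v)
            return True
        except ValueError:
            return False

    if all_parse(int):
        return "integer"
    if all_parse(float):
        return "number"
    if all(v.lower() in {"true", "false"} for v in present):
        return "boolean"
    return "string"


def infer_schema(csv_text: str) -> dict[str, dict]:
    """Infer a type and a missing-value count for each column of CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    schema = {}
    for column in reader.fieldnames or []:
        # Short rows yield None for trailing columns, so treat None as empty.
        values = [row[column] or "" for row in rows]
        schema[column] = {
            "type": infer_type(values),
            "missing": sum(1 for v in values if not v.strip()),
        }
    return schema
```

A learned model could go further, for example recognizing that a string column holds city names or dates, but even these simple rules recover useful context when the original metadata is missing.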

Quality assurance is another area where AI can make a significant impact. AI algorithms can be used to identify errors or inconsistencies in the data, ensuring that the data is accurate and reliable. This is crucial for any data-driven decision-making process, including AI training.
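As a simple example of automated quality assurance, the Python sketch below flags suspicious values using the modified z-score, which is based on the median absolute deviation (MAD); 3.5 is a commonly suggested cutoff. This is a classical statistical check rather than a learned AI model, and the threshold and sample readings are illustrative assumptions.

```python
import statistics


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    # Median absolute deviation: a spread estimate that a few bad values barely move.
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # At least half the values equal the median, so the score is undefined;
        # a fuller check would fall back to another rule here.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]


# Hypothetical temperature readings with one likely data-entry error.
readings = [20.1, 19.8, 20.4, 20.0, 19.9, 85.0, 20.2]
suspicious = flag_outliers(readings)  # [5], the 85.0 reading
```

Using the median rather than the mean keeps the check robust: the very outlier being hunted cannot inflate the spread estimate enough to hide itself.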

Lastly, AI can be used to generate synthetic data, which can be invaluable both for researchers and for training AI models. Synthetic data can mimic the statistical characteristics of real data while greatly reducing the privacy concerns associated with using real-world records. This can greatly expand the possibilities for analysis and AI training, enabling more robust and diverse models and empowering innovation.
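The Python sketch below shows the basic idea behind synthetic data: estimate the mean and standard deviation of each numeric column, then sample new rows from independent normal distributions. It is a deliberately naive example that ignores correlations between columns and offers no formal privacy guarantee; practical generators rely on far richer models and on techniques such as differential privacy. The sample records are hypothetical.

```python
import random
import statistics

# Hypothetical "real" records used only for illustration.
REAL = [
    {"age": 34, "income": 52000},
    {"age": 45, "income": 61000},
    {"age": 29, "income": 48000},
    {"age": 52, "income": 75000},
    {"age": 41, "income": 58000},
]


def fit_columns(rows: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Estimate (mean, sample standard deviation) for every numeric column."""
    params = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        params[column] = (statistics.mean(values), statistics.stdev(values))
    return params


def synthesize(rows: list[dict[str, float]], n: int, seed: int = 0) -> list[dict[str, float]]:
    """Draw n synthetic rows, sampling each column independently from a normal distribution."""
    params = fit_columns(rows)
    rng = random.Random(seed)  # seeded so results are reproducible
    return [
        {column: rng.gauss(mu, sigma) for column, (mu, sigma) in params.items()}
        for _ in range(n)
    ]
```

For instance, `synthesize(REAL, 1000)` returns a thousand rows whose column averages track the originals without copying any individual record, although with so few source rows the fitted distributions are only rough.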

Human collaboration will lead to AI success

AI is revolutionizing our societies and will forever change the way we live. However, it cannot be considered in isolation. For our AI vision to become reality, and to ensure privacy along the way, we need AI, APIs, metadata, and data to coexist and evolve in harmony.

To achieve this, we must foster collaborative efforts across all four components. I believe the success of machine intelligence ultimately resides in communities and stakeholders coming together, which can be supported through Postman’s collaborative environment, contributions to open source projects, and participation in various initiatives surrounding standards and best practices.

What do you think about this topic? Tell us in a comment below.