Postbot: a look inside Postman’s AI assistant

Last month, we announced Postbot for enterprises at POST/CON 24, our annual user conference. This release brings enterprise-grade privacy and availability to Postbot, Postman’s AI assistant, which supercharges API development and management.

Behind the scenes, we also made a number of updates to Postbot’s architecture—all to better support our users and set the stage for what’s to come. In this post, we’ll discuss some of the technical decisions and patterns we employed while creating Postbot.

The first iteration of Postbot

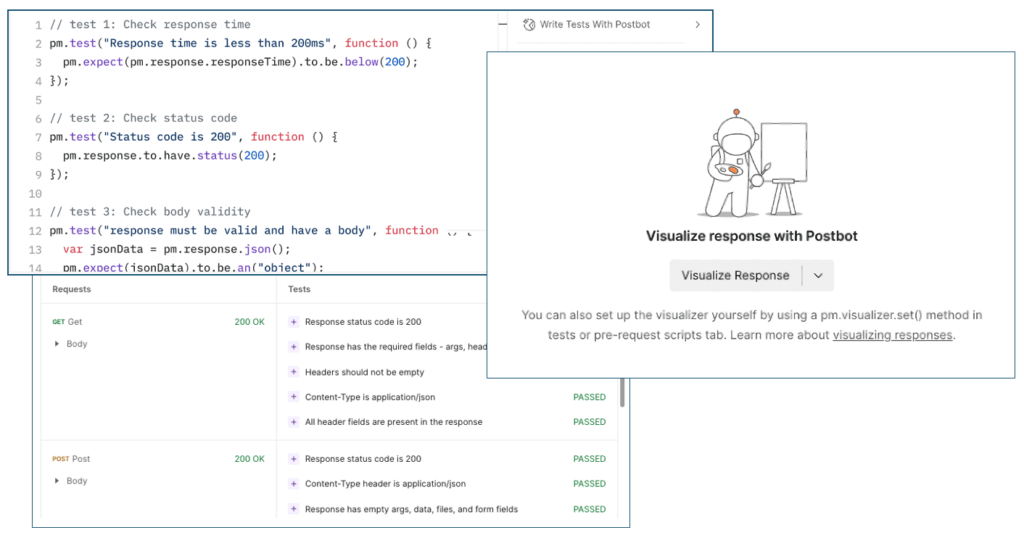

The very first version of Postbot, released in May 2023, could add basic Postman tests and write FQL queries. The next major update came soon after in July, when we rolled out Postbot with natural language support and new features (like generating test suites for collections). This version of Postbot could identify user intents and map them to relevant in-app tasks, such as creating test scripts and visualizing API responses.

While these AI features were powerful, they were disconnected actions within the Postman app, and using them didn’t feel like talking to “one expert AI assistant.” As the set of capabilities grew, having multiple entry points quickly became untenable. Users needed help knowing where to find Postbot and what they could do with it. This also coincided with the rise of chat as a popular way to interact with AI-enabled systems.

The first chat interface

To overcome these limitations, we updated Postbot to always be accessible via the status bar and to support a chat interface. This enabled Postbot to be aware of a user’s context; it could respond according to the tab that was currently open, for example.

A chat interface provided a number of long-term advantages for Postbot. Users no longer had to know what feature Postbot supported and where to find it. Postbot could also ask for clarifications or proactively prompt the user if it “felt” it could offer assistance.

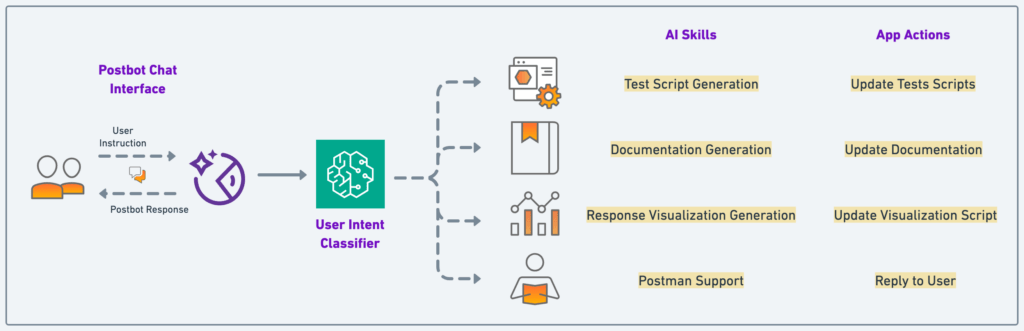

This workflow was supported by a simple routing framework, as shown below. This framework comprises three components: “user intent classifier,” “skills,” and “actions”:

User intent classifier

We used a deterministic neural text classification model to map a user’s natural language instruction to a finite set of intents, which loosely corresponded to the various “skills” that Postbot supported. The intent classification model was based on an encoder-only language model, fine-tuned on a curated dataset of user intents consisting of {instruction, intent label} pairs. The model and its dataset were developed and maintained using the Hugging Face platform, and the model was deployed at scale with AWS SageMaker.

We evaluated the intent classification model with standard classification metrics—accuracy, precision, and recall. In our experiments, we also benchmarked a diverse set of base language models like DeBERTa-v3 by Microsoft, DistilBERT by Hugging Face, and BART by Meta AI against a human performance baseline (inter-annotator agreement). Every in-production user intent classification model significantly beat this baseline. After it was fine-tuned, the intent classification model boasted an accuracy of over 95.82%, with accuracies for certain intents even touching 99%.
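For readers who want to reproduce this kind of evaluation, here is a minimal sketch of how accuracy and per-intent precision and recall can be computed from gold and predicted labels. The intent names and the tiny label lists are hypothetical and are not taken from Postbot's dataset.

```python
from collections import Counter


def per_intent_metrics(gold, predicted):
    """Return overall accuracy plus precision and recall for each intent label."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, predicted):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # p was predicted, but the true label was different
            fn[g] += 1  # the true label g was missed
    accuracy = sum(tp.values()) / len(gold)
    report = {}
    for label in sorted(set(gold) | set(predicted)):
        predicted_as = tp[label] + fp[label]
        actually = tp[label] + fn[label]
        report[label] = {
            "precision": tp[label] / predicted_as if predicted_as else 0.0,
            "recall": tp[label] / actually if actually else 0.0,
        }
    return accuracy, report


# Hypothetical intent labels, for illustration only.
gold = ["add_tests", "add_tests", "visualize", "document"]
pred = ["add_tests", "visualize", "visualize", "document"]
accuracy, report = per_intent_metrics(gold, pred)
```

Per-intent numbers matter here because a high overall accuracy can hide a weak intent that users rarely trigger.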

Skills

Postbot’s skills leveraged GenAI models to generate content for a particular user intent (test scripts, documentation, etc.). Each skill was implemented as a workflow that included gathering the relevant data, preprocessing inputs, running LLM chains, and postprocessing outputs if needed. The LLM chains comprised prompts that were annotated with live contextual app data and certain domain-specific information. Separating workflows for skills allowed us to create custom LLM chains and model configurations.
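The four workflow stages can be sketched roughly as follows. This is an illustration under assumptions, not Postbot's code: the function name, the shape of `app_context`, the prompt wording, and the stubbed `fake_llm` are all hypothetical, and a real skill would call an actual model.

```python
from typing import Callable

FENCE = "`" * 3  # Markdown code-fence marker that models often wrap code in


def run_test_script_skill(app_context: dict, instruction: str,
                          llm: Callable[[str], str]) -> str:
    # 1. Gather the relevant data from the app context.
    request = app_context["request"]
    # 2. Preprocess: keep only what the prompt needs.
    summary = f"{request['method']} {request['url']}"
    # 3. Run the LLM chain: a prompt annotated with live contextual data.
    prompt = (
        "You write Postman test scripts.\n"
        f"Request: {summary}\n"
        f"Instruction: {instruction}\n"
    )
    raw = llm(prompt)
    # 4. Postprocess: drop code-fence lines so only the script remains.
    kept = [line for line in raw.strip().splitlines()
            if not line.startswith(FENCE)]
    return "\n".join(kept)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns a fenced test script."""
    return (FENCE + "js\n"
            'pm.test("Status code is 200", function () {\n'
            "    pm.response.to.have.status(200);\n"
            "});\n" + FENCE)


script = run_test_script_skill(
    {"request": {"method": "GET", "url": "https://example.com/users"}},
    "Add a status code test",
    fake_llm,
)
```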

Actions

At this point, all of Postbot’s features involved content generation using GenAI and insertion into the Postman app. For example, Postbot could update test scripts in the request’s test script editor and refresh test results. Other features could update the request’s description. We codified this finite set of in-app effects as “actions.”

Actions became part of the internal Postbot protocol—they’re how the Postbot system instructs Postman to execute tasks on the user’s behalf. Whenever Postman receives an action from the Postbot system, it can update the UI in a certain way to automate tasks or assist the user. Future actions could involve more complex tasks in Postman, such as navigating to a specific request in a different workspace.

This architecture enabled a single-instruction, single-action usage model, and the natural language interface let users customize their instructions. For example:

“Visualize the coordinates array from the API response on a world map”

“Add performance and security tests to this request”

“Document the payload and response of this request with their JSON Schema”

Postbot as a conversational agent

As usage grew and feedback came in, more user needs and expectations became clear. Many users were trying to delegate complex tasks that a single Postbot “action” couldn’t solve. We also saw cases that highlighted the need for Postbot to be aware of its user-facing persona.

These were signals that Postbot needed to evolve into a conversational agent that had a certain level of autonomy. Specifically, we wanted to ensure the system could give us the following:

- Memory and continuity: Interactions with Postbot shouldn’t consist of unrelated messages grouped together but rather a continuous multi-turn conversation where the user can gradually build up the context and provide feedback on the actions, and Postbot can ask for clarifications on the user’s intent.

- Persona awareness: Postbot should be able to understand its own scope of usage, capabilities, and limitations and interact with the user accordingly. It should also support interactions that aren’t necessarily tied to app actions, such as greetings or discussing broader topics around API development.

- Multi-action execution: Most complex tasks require the interplay of different skills and actions. For example, Postbot might need to look up API documentation first and then add relevant testing code for it. Some other tasks might require Postbot to update multiple requests in a collection. In such situations, Postbot should be able to execute multiple actions in a single interaction, showcasing a higher degree of AI-powered autonomy.

Postbot agent architecture

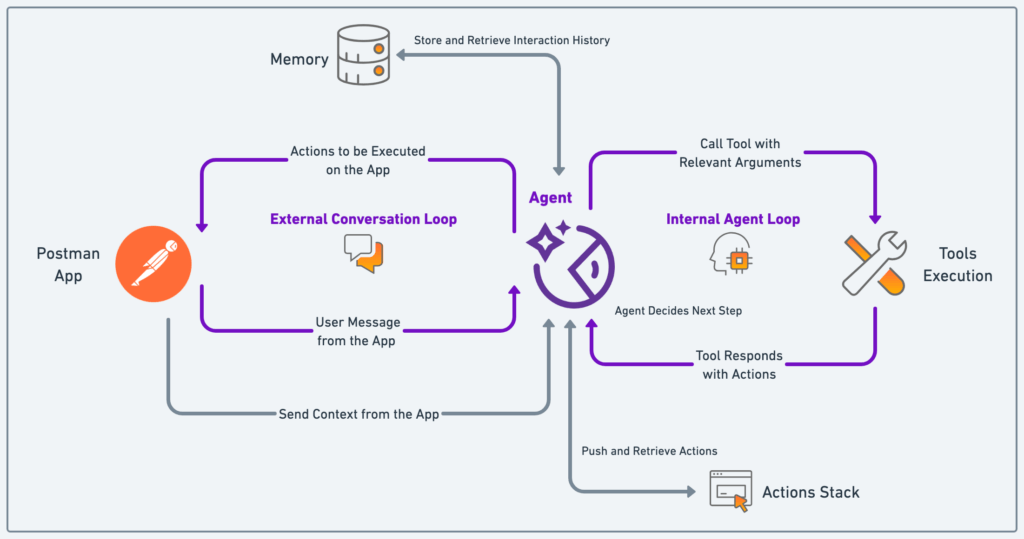

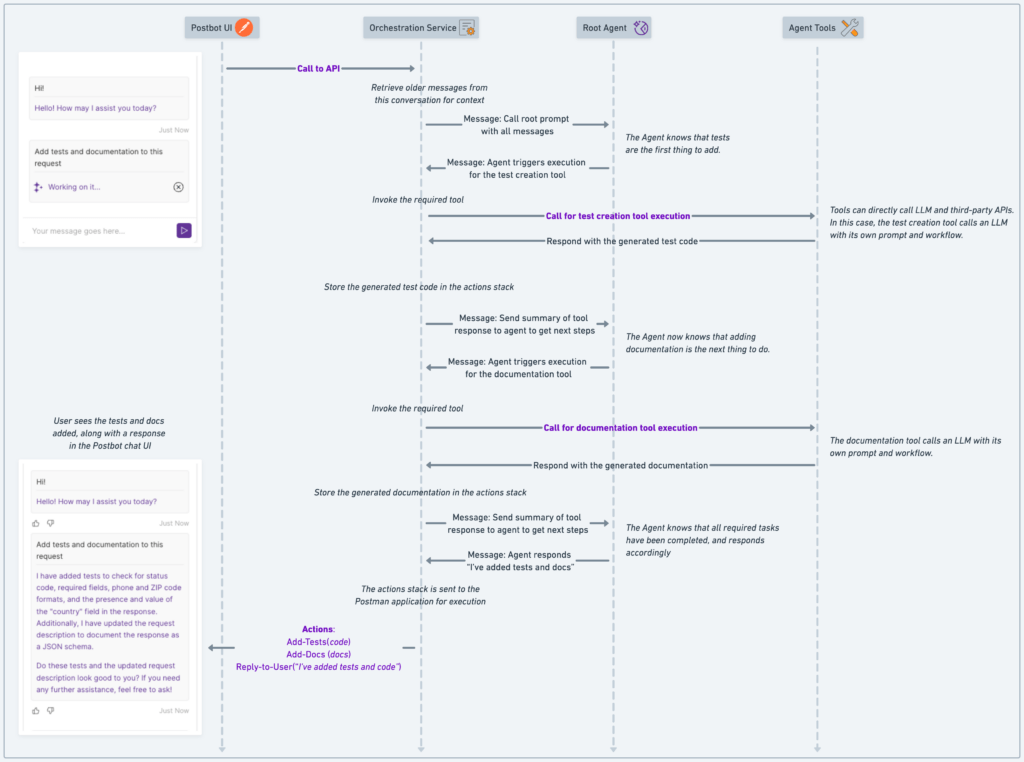

In contrast to the linear routing framework of the previous version of Postbot, the agentic version needed two high-level loops: the “external conversation loop” for better interaction with the user, and the “internal agent loop” for more autonomy. To implement these two loops reliably at scale, we engineered Postbot’s agent with custom orchestration layers. The “user intent classifier,” “skills,” and “actions” were abstracted into the “root agent,” “agent tools,” and “actions,” respectively, as depicted below:

Root agent

The root agent layer runs conversations in natural language with the user (depicted by the external conversation loop) and understands user intent and system states. This layer provides memory, conversation continuity, and support for multi-action execution.

We use off-the-shelf chat-based LLMs with tool usage capability (also known as function calling) to model the root agent. The agent LLM either responds to the user or triggers the execution of the right set of tools and actions via its function-calling capabilities (depicted by the internal agent loop). The agent LLM is initialized with a system prompt that sets up its interaction profile, scope of usage, limitations, and so on, which gives it persona awareness. We explored chat LLMs from various providers like OpenAI, Anthropic, and Mistral AI to model the root agent.

An orchestration layer supports the agent by restricting its capabilities to a finite space of states and actions. This allows us to control the agent’s degree of autonomy, ensuring its reliability, interpretability, and safety in actual production deployments.
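A minimal sketch of such an orchestrated loop is shown below, under stated assumptions: the step cap, the message format, the `support_search` tool, and the scripted stand-in for the LLM are all hypothetical. The point is only that the orchestrator, not the model, decides which tools may run and how many internal steps are allowed.

```python
MAX_STEPS = 4  # hypothetical cap on iterations of the internal agent loop


def run_turn(llm, tools, history, user_message):
    """One pass of the external loop: user message in, agent reply out."""
    history.append({"role": "user", "content": user_message})
    for _ in range(MAX_STEPS):
        decision = llm(history, sorted(tools))
        if decision["kind"] == "respond":
            reply = decision["text"]
            break
        name = decision["tool"]
        if name in tools:
            result = tools[name](**decision["args"])
        else:
            # Outside the allowed space: report an error instead of executing.
            result = "error: tool not available"
        history.append({"role": "tool", "name": name, "content": result})
    else:
        # The loop hit the step cap without the agent producing a response.
        reply = "Sorry, I could not complete that request."
    history.append({"role": "assistant", "content": reply})
    return reply


def scripted_llm(history, tool_names):
    """Stand-in for a chat LLM with function calling."""
    last = history[-1]
    if last["role"] == "user":
        return {"kind": "tool_call", "tool": "support_search",
                "args": {"query": last["content"]}}
    return {"kind": "respond", "text": f"From the docs: {last['content']}"}


tools = {"support_search": lambda query: "Collections group related requests."}
history = []
reply = run_turn(scripted_llm, tools, history, "What is a collection?")
```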

Agent tools and actions

The agent tools are the workhorse of Postbot. Similar to the skills described previously, they allow the agent to generate content or make requests to third-party APIs, and wrap the output under structured “actions.” However, unlike skills, tools do not execute in a linear fashion. Instead, their outputs are stored in an action stack for multi-action execution. This enables two new key capabilities in Postbot: the ability to complete complex tasks for users, and the internal usage of tools to better assist the user. For example, while the older “Postman Support” skill only allowed Postbot to answer users’ questions around Postman, the newer “Postman Support” tool can even be invoked by Postbot to answer its own questions around Postman (without any explicit prompting from the user) to respond to a different query from the user.
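The action stack idea can be illustrated with a small sketch. The class, the tool function, and the choice to deliver actions in the order they were produced are assumptions made for illustration; the source does not specify how Postbot orders or stores its actions.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    type: str
    target: str
    content: str


@dataclass
class ActionStack:
    _items: list = field(default_factory=list)

    def push(self, action: Action) -> None:
        self._items.append(action)

    def flush(self) -> list:
        """Return queued actions in the order they were produced, then clear."""
        actions, self._items = self._items, []
        return actions


def add_tests_tool(request_id: str, stack: ActionStack) -> str:
    """Hypothetical tool: queues an action and reports back to the agent."""
    stack.push(Action("update_test_script", request_id, "// generated tests"))
    return f"queued tests for {request_id}"


# One user interaction that updates several requests in a collection.
stack = ActionStack()
for request_id in ["req-1", "req-2"]:
    add_tests_tool(request_id, stack)
actions = stack.flush()
```

Because tools only queue actions, the agent can call several of them before anything is sent back to the app.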

Each tool is represented in a format compatible with the underlying LLM’s tool usage API (e.g., JSON Schema for OpenAI’s function calling). Most tools need input context obtained from the user’s app or populated by the agent during tool identification. For example, a user’s question about Postman can be converted to a keyword-based query by the agent and passed as an argument to the Support tool.
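As an example, a support tool could be described in the JSON Schema based shape that OpenAI's Chat Completions API accepts for tools. The tool name, description, and `query` parameter here are hypothetical, and `parse_tool_arguments` is an illustrative helper rather than part of any SDK.

```python
import json

# Hypothetical tool definition in the OpenAI function-calling format.
support_tool = {
    "type": "function",
    "function": {
        "name": "postman_support",
        "description": "Search Postman documentation for an answer.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "Keyword-based search query.",
                },
            },
            "required": ["query"],
        },
    },
}


def parse_tool_arguments(tool: dict, raw_arguments: str) -> dict:
    """Decode the model's JSON-encoded arguments and check required keys."""
    args = json.loads(raw_arguments)
    required = tool["function"]["parameters"]["required"]
    missing = [key for key in required if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return args


# The model returns arguments as a JSON string, which the caller must parse.
args = parse_tool_arguments(support_tool, '{"query": "fork collection"}')
```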

The actions defined previously were largely ported to the agent architecture as is. We also introduced a new action to let the agent seek user intervention or ask for extra information. For example, if a selected tool requires additional information from the user’s app, this can be fetched without involving the user. This eliminates the need for all possible app context to be sent to the agent in each call.

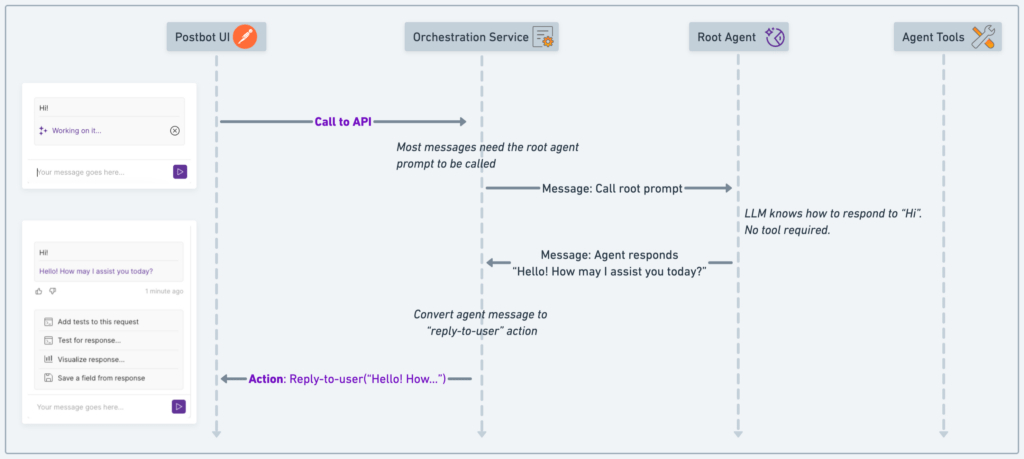

Interactions

The Postbot agent is defined by our custom orchestration that controls its behavior. The fundamental unit of this orchestration is what we call an “interaction.” Any data exchange between our custom orchestration service, the root agent, and the agent tools is considered an interaction. We have four primary interactions:

- A user’s message to Postbot

- The agent’s response to the user (comprising text responses or actions)

- The agent calling a tool

- The tool responding to the agent

All interactions are persisted to enable multiple runs of the “external conversation loop.” It is the controlled execution of these interactions that allows us to model the autonomy and capabilities of the agent. Here’s what a simple set of interactions might look like:
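A minimal sketch, with hypothetical field names and message text rather than Postbot's actual schema:

```python
from dataclasses import dataclass
from typing import Literal

# The four primary interaction kinds listed above.
Kind = Literal["user_message", "agent_response", "tool_call", "tool_response"]


@dataclass(frozen=True)
class Interaction:
    kind: Kind
    content: str


simple_conversation = [
    Interaction("user_message", "Hi! What can you help me with?"),
    Interaction("agent_response",
                "I can write tests, document requests, and answer "
                "questions about Postman."),
]
```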

This requires no tools to be used since the user query is simple enough for the root agent to handle on its own. However, a more complex user query might involve the use of one or more tools:
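As a hedged illustration, with a hypothetical tool name and made-up fields, such an exchange could be recorded like this:

```python
# Each dict is one persisted interaction; all field names are illustrative.
tool_conversation = [
    {"kind": "user_message",
     "content": "Add a status code test to this request"},
    {"kind": "tool_call",
     "tool": "generate_tests",
     "arguments": {"request_id": "req-1"}},
    {"kind": "tool_response",
     "tool": "generate_tests",
     "actions": [{"type": "update_test_script", "target": "req-1"}]},
    {"kind": "agent_response",
     "content": "I've added a status code test to the request.",
     "actions": [{"type": "update_test_script", "target": "req-1"}]},
]


def tools_used(conversation):
    """List the tools the agent called during a conversation."""
    return [i["tool"] for i in conversation if i["kind"] == "tool_call"]
```

Here the tool call and tool response form one pass of the internal agent loop, and the final agent response carries the resulting action back to the app.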

Why custom orchestration?

Agentic AI assistants have been the talk of the town for a while now. However, deploying them at scale is widely regarded as challenging. Our custom orchestration for the Postbot agent architecture addresses some of these challenges.

Controlled agency

The agency of an AI assistant ranges from zero autonomy (e.g., LangChain chains) to complete autonomy (e.g., plan-and-execute agents like AutoGPT). A total lack of autonomy severely limits the assistant’s ability to execute complex multi-step tasks. On the other hand, complete autonomy is often unreliable and inaccurate in real-world scenarios. Our problem statement called for a smart tradeoff between the two. While frameworks like Microsoft’s AutoGen allow a certain level of control over the agent, that control is usually delegated to a system of non-deterministic LLMs and is hard to customize.

Fine-grained execution control

While many existing frameworks and services (like the OpenAI Assistants API and AWS Bedrock Agents) offer managed persistence for conversational AI agents, we opted for self-hosted persistence for agent conversations and actions. This gave us many benefits, like multiplexing across different LLM providers and models, even within a single interaction with the user. Even the set of tools the agent has access to can be customized at every step. For example, if a user has read-only access to the Postman workspace they’re in, we can limit the agent’s tools to those that don’t involve any update actions.
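The read-only example could look something like the sketch below. The tool names, the `mutates_workspace` flag, and the role strings are hypothetical; the idea is simply that the orchestrator filters the tool list before each agent call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    mutates_workspace: bool  # True if the tool can produce update actions


ALL_TOOLS = [
    Tool("postman_support", mutates_workspace=False),
    Tool("explain_response", mutates_workspace=False),
    Tool("add_tests", mutates_workspace=True),
    Tool("update_documentation", mutates_workspace=True),
]


def tools_for(role: str) -> list:
    """Choose the tools exposed to the agent for this step."""
    if role == "viewer":
        # Read-only users only get tools that never update the workspace.
        return [t for t in ALL_TOOLS if not t.mutates_workspace]
    return list(ALL_TOOLS)


viewer_tools = [t.name for t in tools_for("viewer")]
editor_tools = [t.name for t in tools_for("editor")]
```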

This decision also gave us multiple opportunities for optimization. The Postbot UI lets users express their intents via chat or via fixed calls to action, like suggestions or buttons in specific workflows. The latter lets us bypass the agent’s tool identification step, improving Postbot’s perceived performance.
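A rough sketch of this shortcut, assuming a hypothetical input shape in which button clicks already name their tool; the counter only exists to show that the stand-in LLM call is skipped.

```python
llm_calls = 0  # counts how often the (stubbed) tool identification runs


def identify_tool_with_llm(text: str) -> str:
    """Stand-in for the agent's LLM-based tool identification step."""
    global llm_calls
    llm_calls += 1
    return "add_tests" if "test" in text.lower() else "postman_support"


TOOLS = {
    "add_tests": lambda: "tests added",
    "postman_support": lambda: "support answer",
}


def handle(user_input: dict) -> str:
    if user_input["source"] == "button":
        # The button already determines the tool, so no LLM call is needed.
        tool_name = user_input["tool"]
    else:
        tool_name = identify_tool_with_llm(user_input["text"])
    return TOOLS[tool_name]()


button_result = handle({"source": "button", "tool": "add_tests"})
calls_after_button = llm_calls
chat_result = handle({"source": "chat", "text": "Write tests for this request"})
calls_after_chat = llm_calls
```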

The agent also allows handling some user requests without using an LLM. For instance, certain user requests can be fulfilled merely by tweaking a configuration on the Postman app. By injecting this interaction directly into our persistence layer, the agent “thinks” it solved the problem and maintains the conversation flow in future interactions.
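One way to picture this is the sketch below, where a request is handled by flipping a setting and then recording a synthetic exchange. The store layout, the `synthetic` flag, and the `follow_redirects` setting name are assumptions for illustration only.

```python
def handle_config_request(store, settings, user_message, setting, value):
    """Fulfil a request by changing a setting, with no LLM call involved."""
    settings[setting] = value
    # Record the exchange as if the agent had handled it, so later turns
    # see a consistent conversation history.
    store.append({"kind": "user_message", "content": user_message})
    store.append({
        "kind": "agent_response",
        "content": f"Done. I set {setting} to {value}.",
        "synthetic": True,  # written by the orchestrator, not by the LLM
    })


store = []
settings = {"follow_redirects": True}
handle_config_request(store, settings, "Stop following redirects",
                      "follow_redirects", False)
```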

Modularity and extensibility

The Postbot agent architecture is designed to be modular and easily extensible. Its interaction-based framework allows it to be easily extended to a multi-agent system, where one or more sub-agents with a similar architecture can be independently developed and interfaced with the root agent. Similarly, the tools-and-actions framework allows easy integrations with third-party services.

What’s next?

These benefits of custom orchestration open up several possibilities down the road. As tools get richer, some will need to evolve into their own agents, producing a tiered, multi-agent architecture. A simple set of interactions ensures that this multi-agent system is equally easy to reason about.

The set of actions that Postbot supports is also likely to expand. Limiting the number of choices each agent or tool needs to make prevents a larger set of actions from degrading output quality.

Another important part of bringing AI to users is an experience built for AI. Try this thought experiment: what would common UX patterns look like if they were conceptualized today (with LLMs available)? That’s an area we’re excited to explore!

We hope that what we learned while building Postbot has been useful to you. And as we continue to make advancements, we’ll continue to share updates and product announcements. If you haven’t already, get started with Postbot today!
