What is gRPC?

gRPC is a schema-driven framework for service-to-service communication in distributed environments. It is a language-agnostic implementation of the Remote Procedure Call (RPC) model, and it supports streaming and strongly typed service contracts through its use of HTTP/2 and Protocol Buffers (Protobuf).

Here, we’ll explore the history of gRPC, explain how it works, and compare it to REST. We’ll also discuss the key benefits, challenges, and use cases for gRPC. Finally, we’ll highlight how the Postman API Platform makes it easier to design, develop, and debug gRPC APIs.

What is the history of gRPC?

The “RPC” in gRPC stands for “Remote Procedure Call.” RPC emerged in the late 1970s and early 1980s, and it allows clients and servers to interact with one another as if they were both on the same machine.

gRPC is an implementation of RPC that Google developed and open-sourced in 2015. It was initially conceived as the next generation of Google’s existing RPC infrastructure, called “Stubby,” which the company had used since 2001 to connect a large number of microservices running across many data centers. gRPC is now a Cloud Native Computing Foundation (CNCF) project.

gRPC modernizes and streamlines the way remote procedure calls are made. For instance, its use of Protocol Buffers (Protobuf) as its interface definition language (IDL) provides strong typing and facilitates code generation in multiple languages. Additionally, its use of HTTP/2 for transport improves network efficiency and supports real-time communication.

How does gRPC work?

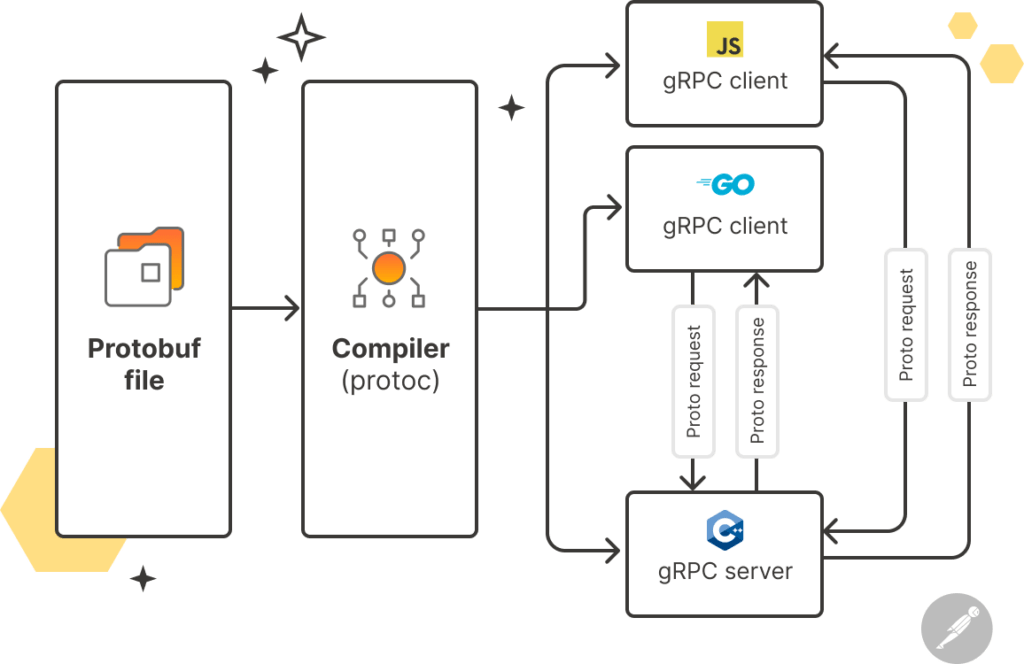

gRPC enables distributed, polyglot services to communicate efficiently over a network. This process starts with developers defining service contracts and data structures in Protobuf (.proto) files. These files contain information about the service methods, message types, and structure of the API (we’ll explore Protobuf in more detail later in this article).

Next, the Protocol Buffers compiler (known as “protoc”), typically together with a language-specific gRPC plugin, processes these .proto files and generates client and server code in the target programming languages. gRPC’s language-agnostic approach makes it well-suited for distributed systems and microservice-based architectures, in which services running on different nodes may have different runtimes.

The code that is generated by protoc includes classes or modules that abstract away much of the complexity of making gRPC calls. For clients, the generated code typically includes method stubs that correspond to the RPC methods defined in the .proto file. These stubs handle serializing the request, making the gRPC call over the network, and deserializing the response. For servers, the generated code provides a base class with one method per RPC, and developers override those methods to implement the service’s behavior.
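The division of labor between a stub and a service base class can be sketched in plain Python. This is a hand-written illustration, not real protoc output: the `Greeter` service, its `SayHello` method, the message classes, and the in-process “transport” are all hypothetical, and JSON stands in for Protobuf serialization so that the example runs with only the standard library.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class HelloRequest:
    name: str


@dataclass
class HelloReply:
    message: str


class GreeterServicer:
    """Stand-in for a generated server base class: one method per RPC."""

    def SayHello(self, request: HelloRequest) -> HelloReply:
        raise NotImplementedError("SayHello is not implemented")


class GreeterStub:
    """Stand-in for a generated client stub."""

    def __init__(self, transport):
        # transport: callable taking (method_name, request_bytes) -> reply bytes
        self._transport = transport

    def SayHello(self, request: HelloRequest) -> HelloReply:
        payload = json.dumps(asdict(request)).encode()  # serialize the request
        raw = self._transport("SayHello", payload)      # make the "network" call
        return HelloReply(**json.loads(raw))            # deserialize the response


class MyGreeter(GreeterServicer):
    """The developer's code: override the method to define behavior."""

    def SayHello(self, request: HelloRequest) -> HelloReply:
        return HelloReply(message=f"Hello, {request.name}!")


def in_process_transport(servicer):
    """Fake network: decode the request, dispatch to the servicer, encode the reply."""
    def call(method_name, request_bytes):
        request = HelloRequest(**json.loads(request_bytes))
        reply = getattr(servicer, method_name)(request)
        return json.dumps(asdict(reply)).encode()
    return call


stub = GreeterStub(in_process_transport(MyGreeter()))
reply = stub.SayHello(HelloRequest(name="Ada"))
print(reply.message)
```

The caller only ever sees `stub.SayHello(...)`, which looks like a local method call. Real generated code follows the same shape but uses Protobuf messages and an HTTP/2 channel.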

There are four method types in gRPC—unary, server streaming, client streaming, and bidirectional streaming. These communication patterns are made possible by gRPC’s use of HTTP/2 as the underlying transport protocol, which enables multiple data streams to efficiently share a single communication channel or connection. Bidirectional streaming support is a key differentiator of gRPC, as it unlocks important use cases such as real-time chat applications and IoT systems.
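One detail that makes streaming possible is message framing. In the gRPC-over-HTTP/2 protocol, each message is prefixed with a one-byte compression flag and a four-byte big-endian length, so a single HTTP/2 stream can carry any number of messages back to back. The sketch below illustrates only that framing; the function names are made up for this example, the payloads are placeholder bytes rather than real Protobuf messages, and gRPC libraries handle all of this internally.

```python
import struct


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Prefix a payload with a 1-byte compressed flag and a 4-byte big-endian length."""
    return struct.pack(">BI", int(compressed), len(payload)) + payload


def read_messages(stream: bytes):
    """Yield each payload from a concatenation of length-prefixed messages."""
    offset = 0
    while offset < len(stream):
        _flag, length = struct.unpack_from(">BI", stream, offset)
        offset += 5  # skip the 5-byte prefix
        yield stream[offset:offset + length]
        offset += length


# Three messages sent on one stream, as in a server-streaming call.
stream = b"".join(frame_message(m) for m in [b"tick-1", b"tick-2", b"tick-3"])
messages = list(read_messages(stream))
print(messages)
```

A unary call is the special case in which each direction carries exactly one framed message.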

What is Protobuf?

gRPC uses Protocol Buffers (Protobuf) as its interface definition language (IDL) and default serialization format, which is one of its primary enhancements over earlier RPC systems. Protobuf is a flexible and efficient method for serializing structured data into a binary format. Binary-encoded data is typically more compact and faster to serialize and deserialize than text-based formats like JSON or XML.
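To make the size difference concrete, here is a minimal sketch of how Protobuf’s wire format encodes two fields, compared with the equivalent JSON. It hand-implements only varint and length-delimited fields; the message layout (field 1 as an integer `id`, field 2 as a string `name`) is a hypothetical example, and real applications would use generated Protobuf classes instead.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Varint field: tag (field number, wire type 0), then the value."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Length-delimited field: tag (wire type 2), byte length, UTF-8 bytes."""
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


# Hypothetical message: field 1 = id (int), field 2 = name (string)
binary = encode_int_field(1, 150) + encode_string_field(2, "Ada")
text = json.dumps({"id": 150, "name": "Ada"}).encode("utf-8")

print(binary.hex(), len(binary))
print(text.decode(), len(text))
```

The binary form omits field names entirely and identifies each field by its number, which is where most of the savings come from in a message this small.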

Protobuf has several advantages that go beyond binary data serialization. For instance, its ability to generate code reduces development overhead and makes it easier to work with serialized data in various programming languages. Protobuf also allows you to add new fields to data structures without disrupting existing code—and promotes strong typing, which ensures data consistency and reduces the risk of error.
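Adding fields safely works because every field on the wire is tagged with its number and wire type, so a reader can step over fields it does not recognize. The sketch below decodes a small hand-built payload twice: once with an “old” schema and once with a “new” one that knows about an extra field. The field names and numbers are hypothetical, only two wire types are handled, and unknown fields are simply skipped here, whereas real Protobuf libraries do considerably more.

```python
def decode_varint(buf: bytes, pos: int):
    """Return (value, next_pos) for the varint starting at pos."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def decode_known_fields(buf: bytes, known: dict) -> dict:
    """Decode fields listed in `known` (field number -> name) and skip the rest."""
    message, pos = {}, 0
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:    # varint
            value, pos = decode_varint(buf, pos)
        elif wire_type == 2:  # length-delimited (strings, bytes, nested messages)
            length, pos = decode_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        if field_number in known:
            message[known[field_number]] = value
    return message


# Bytes from a newer writer: field 1 = 150, field 2 = "Ada", field 3 = 7 (added later)
payload = bytes.fromhex("0896011203416461" "1807")

old_reader = decode_known_fields(payload, {1: "id", 2: "name"})
new_reader = decode_known_fields(payload, {1: "id", 2: "name", 3: "age"})
print(old_reader)
print(new_reader)
```

The old reader still gets the fields it understands, which is why field numbers should not be reused or renumbered once a message type is in use.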

What are the four service methods defined by gRPC?

gRPC defines four types of service method for remote procedure calls (RPCs). Each one represents a basic communication pattern between a client and a server:

- Unary RPC: In a unary RPC, the client sends a single request to the server and waits for a single response. This one-to-one communication pattern is the simplest form of RPC, and it is similar to traditional HTTP requests.

- Server streaming RPC: In a server streaming RPC, the client sends a single request to the server and receives a stream of responses in return.

- Client streaming RPC: In a client streaming RPC, the client sends a stream of requests to the server and waits for a single response. This method is useful when the client needs to send a series of data to the server, and the server responds after processing the entire stream of requests.

- Bidirectional streaming RPC: In a bidirectional streaming RPC, both the client and the server can send a stream of messages to each other concurrently. This enables real-time communication between the client and server, with the ability to send and receive messages as the need arises.
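The four patterns differ only in whether each side sends one message or a stream of them. As a rough analogy, they can be modeled with ordinary Python functions and generators. The function names and data below are invented for illustration, and nothing here touches the network or the gRPC library.

```python
from typing import Iterable, Iterator


def get_price(symbol: str) -> float:
    """Unary: one request in, one response out."""
    return {"ACME": 10.0}.get(symbol, 0.0)


def watch_price(symbol: str) -> Iterator[float]:
    """Server streaming: one request in, a stream of responses out."""
    for delta in (0.0, 0.5, 1.0):
        yield get_price(symbol) + delta


def upload_readings(readings: Iterable[float]) -> float:
    """Client streaming: a stream of requests in, one response (the average) out."""
    total = count = 0
    for reading in readings:
        total += reading
        count += 1
    return total / count if count else 0.0


def chat(messages: Iterable[str]) -> Iterator[str]:
    """Bidirectional streaming: a stream in and a stream out."""
    for message in messages:
        yield f"echo: {message}"


unary_result = get_price("ACME")
server_stream_result = list(watch_price("ACME"))
client_stream_result = upload_readings(iter([1.0, 2.0, 3.0]))
bidi_result = list(chat(iter(["hi", "bye"])))
print(unary_result, server_stream_result, client_stream_result, bidi_result)
```

This `chat` sketch replies once per incoming message for simplicity. In an actual bidirectional stream the two directions are independent, so either side may send at any time.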

What is the difference between gRPC and REST?

gRPC and REST are two different approaches to building APIs: REST is an architectural style, while gRPC is a framework. They both use HTTP to enable clients and servers to exchange data, and both can be implemented with a wide range of programming languages.

However, gRPC and REST employ very different communication models. With REST, clients use a standardized set of HTTP methods to request resources from a server via their corresponding API endpoints. The data that is exchanged through REST APIs is typically formatted in JSON, which is highly flexible and readable by both humans and machines.

In contrast, a gRPC client operates by calling functions on the server as if they were local functions. gRPC clients can send one or many requests to the server, and the server may send one or many responses back. Additionally, gRPC uses Protobuf to define the data structure—and then serializes the data into binary format.

REST is the most popular architectural style for building APIs. Its standardized interface makes it easy for developers to learn and implement, and it is most commonly used to create web applications. gRPC, in contrast, was designed to support highly performant microservice architectures that may require real-time, bidirectional data transfer.

What are the benefits of gRPC?

gRPC offers many benefits that make it a compelling choice for building efficient and highly performant APIs in modern distributed systems. These benefits include:

- Efficiency: Protobuf serializes data into a binary format, which reduces data transfer size and processing time—especially in comparison to text-based formats like JSON.

- Language-agnosticism: gRPC allows developers to automatically generate client and server code in multiple programming languages, which facilitates interoperability across diverse technology stacks.

- Strong typing: Protobuf enforces strong typing, which provides a well-defined and validated structure for data. This reduces the likelihood of errors and mismatches when data is exchanged between services.

- Extensibility: With gRPC, you can add new fields or methods to your services and messages without breaking existing client code. This enables teams to evolve and extend APIs without forcing clients to update immediately, which is especially important in distributed systems.

- Code generation: Protobuf’s compiler generates client and server code from .proto files, which reduces manual coding effort and helps ensure consistency and accuracy.

- Support for interceptors and middleware: gRPC enables developers to implement authentication, logging, and monitoring with interceptors and middleware. This helps ensure that gRPC services remain clean and focused on business logic—and that essential functionalities are introduced consistently across the entire service infrastructure.
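The interceptor idea from the last item can be sketched as a chain of wrappers around a handler. This is a conceptual illustration only: the `Handler` signature, the dictionary-based request and metadata, and the function names are assumptions made for this example and do not mirror the interceptor API of any particular gRPC library.

```python
import time
from typing import Callable

Handler = Callable[[dict, dict], dict]  # (request, metadata) -> response


def logging_interceptor(next_handler: Handler, log: list) -> Handler:
    """Record the method name and elapsed time for every call."""
    def handler(request, metadata):
        start = time.perf_counter()
        response = next_handler(request, metadata)
        log.append((request["method"], time.perf_counter() - start))
        return response
    return handler


def auth_interceptor(next_handler: Handler, valid_token: str) -> Handler:
    """Reject calls whose metadata lacks the expected token."""
    def handler(request, metadata):
        if metadata.get("authorization") != valid_token:
            return {"status": "UNAUTHENTICATED"}
        return next_handler(request, metadata)
    return handler


def get_user(request, metadata):
    """Business logic only: no auth or logging code in here."""
    return {"status": "OK", "user": request["user_id"]}


log = []
service = logging_interceptor(auth_interceptor(get_user, "secret"), log)

ok = service({"method": "GetUser", "user_id": 42}, {"authorization": "secret"})
denied = service({"method": "GetUser", "user_id": 42}, {})
print(ok, denied, len(log))
```

Because the cross-cutting concerns live in wrappers, the same chain can be applied to every method of a service without touching the handlers themselves.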

What are the primary use cases of gRPC?

gRPC is a versatile framework that is well-suited for situations in which efficient, cross-platform communication, real-time data exchange, and high-performance networking are essential. Its primary use cases include:

- Microservice architectures: In microservice-based architectures, individual services may be developed in distinct programming languages to suit their specific needs. Additionally, numerous tasks may need to be carried out simultaneously, and services can face varying workloads. gRPC’s language-agnostic approach, together with its support for concurrent requests, makes it a good fit for these scenarios.

- Streaming applications: gRPC’s support for multiple streaming patterns enables services to share and process data as soon as it becomes available—without the overhead of repeatedly establishing new connections. This makes it a good fit for real-time chat and video applications, online gaming applications, financial trading platforms, and live data feeds.

- IoT systems: Internet-of-Things (IoT) systems connect an enormous number of devices that continuously exchange data. gRPC’s low latency, together with its support for real-time data ingestion, processing, and analysis, makes it a good fit for these environments.

What are some challenges of working with gRPC?

While gRPC offers numerous advantages, it also poses some unique challenges. These challenges include:

- The complexity of Protobuf: Protobuf is very efficient and powerful, but defining message structures and service contracts with .proto files can be more challenging than with text-based formats like JSON.

- A steep learning curve: It can take time for developers of all skill levels to understand the intricacies of Protobuf, HTTP/2, strong typing, and code generation.

- Difficult debugging workflows: Binary serialization is not human-readable, which can make debugging and manual inspection more challenging when compared to JSON or XML.

- A less mature ecosystem: While gRPC has gained significant popularity, it does not have as extensive an ecosystem and community as some older technologies. As a result, finding gRPC libraries or tools may be more difficult.

How can Postman help you work with gRPC?

Postman’s gRPC client addresses the challenges of working with gRPC APIs, enabling teams to build sophisticated service architectures with ease. With Postman, you can:

- Send requests with all four gRPC method types: Postman users can invoke unary, client-streaming, server-streaming, and bidirectional-streaming methods from an intuitive user interface.

- Get message hints: Postman enables users to compose and send gRPC messages quickly with the help of autocomplete hints, which are based on the service definition. Users can also generate an example message in a single click.

- Create a service definition from scratch: Postman’s API Builder allows you to create a Protobuf service definition from within the Postman API Platform. You can then use this service definition as the single source of truth for your entire API project.

- Save your multi-file Protobuf APIs in Postman’s cloud: With Postman, users can easily import their gRPC service definitions—and save all relevant .proto files as a single API. This allows teams to organize even their most complex gRPC APIs within Postman, facilitating reusability and collaboration.

- Automatically access available services and methods: Postman includes support for gRPC server reflection, which enables users to send gRPC requests without manually uploading a .proto file or creating a schema.

- Eliminate context switching: With Postman, users can view gRPC responses directly alongside the request data and documentation. This streamlines workflows by eliminating the need to switch back and forth between windows and tools.

- Get full visibility into every gRPC connection: Postman users can see a unified timeline of all events that occur over a gRPC streaming connection. They can also filter noisy streams to isolate specific message types, which streamlines the debugging process.
