Travelogue of Postman Collection Format v2

Collections are the way we classify and organize API services within the Postman client app. Soon enough, collections outgrew their original home and made their way into a myriad of places such as build systems, continuous integration systems, monitoring servers and whatnot!

The key ingredients that made this possible are Newman and the Postman Collection Format. Together they recreated the magic of Postman outside the client, in the realm of the command line!

What does the Postman Collection Format achieve?

If you have ever opened the file that is created when you export a collection from within the Postman app, you’ll notice that it is actually a JSON file. The file contains all the data (and metadata) Postman needs to recreate the collection when it is imported back. Newman uses the same file to run the collection from the command line.

This file (or the URL generated when a collection is uploaded from the app) enabled a number of distributed teams to collaborate on the development and integration of web services. Instead of sharing complex API documentation, developers could now share collections that outline the web services exactly, along with documentation and tests.

It did not take long for many to start creating collections outside the Postman app, within their build systems, and feeding them to Newman to execute tests. Later, running collections at regular intervals and exporting the results to an aggregator provided ways to monitor services and perform continuous performance analysis. The collection format also enabled a few users to build an intermediate service that connects two or more web services.

To summarize, the collection format makes things easier at every stage of the API workflow.

What does the present v1 Postman Collection Format look like?

The following is a collection with two requests contained within a folder. The two requests outline how to create and delete JSON files on jsonblob.com.

```json
{
  "id": "6fd141b6-1ef7-ea36-6fb8-917f5d4e938b",
  "name": "JSONBlob Core API",
  "folders": [{
    "id": "76e8a3f4-4b8e-781f-2c1b-76a66b769158",
    "name": "JSON API Services",
    "collection": "6fd141b6-1ef7-ea36-6fb8-917f5d4e938b",
    "order": [
      "1bc7841d-ba1a-3784-25eb-9c40adeefb40",
      "59665941-3886-0622-fe29-6d4d4b02e4ee"
    ]
  }],
  "requests": [
    {
      "id": "1bc7841d-ba1a-3784-25eb-9c40adeefb40",
      "name": "Create a Blob",
      "dataMode": "raw",
      "rawModeData": "{\"people\":[\"bill\", \"steve\", \"bob\"]}",
      "headers": "Content-Type: application/json\nAccept: application/json\n",
      "method": "POST",
      "pathVariables": {},
      "url": "https://jsonblob.com/api/jsonBlob",
      "collectionId": "6fd141b6-1ef7-ea36-6fb8-917f5d4e938b"
    },
    {
      "id": "59665941-3886-0622-fe29-6d4d4b02e4ee",
      "name": "Delete a Blob",
      "dataMode": "raw",
      "headers": "Content-Type: application/json\nAccept: application/json\n",
      "method": "DELETE",
      "url": "https://jsonblob.com/api/jsonBlob",
      "tests": "if (responseCode.code === 200) {\n tests[\"Successfully Deleted\"] = true;\n}\nelse {\n tests[\"Blob not found!\"] = false;\n}",
      "collectionId": "6fd141b6-1ef7-ea36-6fb8-917f5d4e938b"
    }
  ]
}
```
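To see why the IDs in v1 get in the way, here is a minimal sketch that rebuilds the folder-to-request hierarchy from the v1 fields shown above (`folders`, `order`, `requests`, `id`). The function name `resolveFolders` and its output shape are hypothetical, for illustration only.

```javascript
// Hypothetical helper: rebuild the folder -> request tree of a v1 collection.
// v1 keeps requests in a flat array and folders point at them by ID via `order`,
// so every lookup has to go through an ID index.
function resolveFolders(collection) {
  // Index requests by ID for constant-time lookups.
  const byId = new Map(collection.requests.map((r) => [r.id, r]));
  return collection.folders.map((folder) => ({
    name: folder.name,
    // Follow each ID in `order`; IDs without a matching request are skipped.
    requests: folder.order
      .map((id) => byId.get(id))
      .filter((r) => r !== undefined)
      .map((r) => r.name),
  }));
}

// Trimmed-down copy of the v1 example above.
const v1 = {
  folders: [{
    name: "JSON API Services",
    order: ["1bc7841d-ba1a-3784-25eb-9c40adeefb40", "59665941-3886-0622-fe29-6d4d4b02e4ee"],
  }],
  requests: [
    { id: "1bc7841d-ba1a-3784-25eb-9c40adeefb40", name: "Create a Blob" },
    { id: "59665941-3886-0622-fe29-6d4d4b02e4ee", name: "Delete a Blob" },
  ],
};

console.log(JSON.stringify(resolveFolders(v1)));
```

Renaming or regenerating a single ID silently breaks this lookup, which is part of what motivates the lexical identification described further down.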

How can we make the Collection Format better?

If you are new to the collection format, you might have a number of questions, and answering them is exactly what we set out to do. The fewer questions one has, the easier the format is to consume. With that in mind, we laid down the end objectives of the new format:

- The format must be human-readable and human-writable, with an intuitive structure.

- Workflow-first approach: using this format and the tooling around it, developers and organizations should be able to service the entire API lifecycle – design, development, documentation, testing, deployment and maintenance.

- The format must be highly portable, so that it can be moved between systems without losing functionality.

- Extensibility should be built into the format, so that tooling built on top of it is easy to implement.

Using the above core principles, we set out to define Postman Collection Format v2. The draft structure of the new format is outlined in a step-by-step presentation. We will delve into the presentation after summarising the fundamental aspects of the new format. If you want to skip the techno-blabber, head over to the slides.

Human Readability

Thanks to JSON, the format was already human-readable and popular. Even so, we promised ourselves to do our best not to introduce anything that would make it harder to consume.

The referencing of things with those long and cryptic UID strings had to go. In fact, the referencing concept itself had to become more intuitive, so we adopted lexical identification of properties. Instead of referring to or ordering items by ID, we chose to place each item where its location directly signifies its context and position.

Each property had to be intuitive and explicable based only on its own name, its parent’s name and, to a small degree, its value type. This reduces the need to look things up in documentation or cheat sheets.

Breaking down the format to simple units

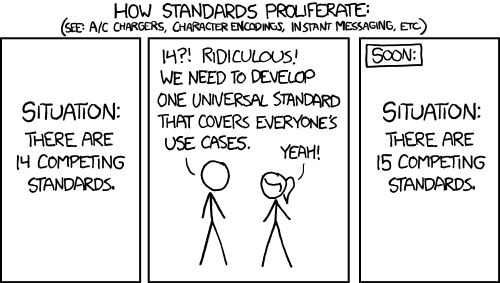

We decided that we were not going to follow the path of the famous xkcd strip on “standards”!

The result was simple, literally! We set out to define a sub-structural rule within JSON. The format would contain a finite number of structures, each governed by the name of its property (we call these “units”). For example, the key description, wherever it is placed, describes the object within which it resides. That simple! We came up with an initial set of units: request, url, header, data, variables, events, scripts, version, description, etc.

Can custom units (properties) be added?

Indeed! Since the format is free-form, custom units can be added. Then again, isolated sources adding unexpected or conflicting units would severely cripple the portability of the format across toolchains. To combat that, we introduced the concept of namespaced meta properties.

Free-form meta data within every unit

Any property name in the new format that starts with an underscore character is treated as a user-defined property (except _postman, which is reserved). This ensures that future updates to the format, or computations by other tools, will not affect custom units. We also recommend namespacing such property names with a prefix, again separated by an underscore: _mytool_details is an example of a unit called “details” in the “mytool” namespace.
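A minimal sketch of how a tool might classify property names under these rules. The function name and return shape are hypothetical, and treating every `_postman_…` name as reserved (not just `_postman` itself) is an assumption.

```javascript
// Hypothetical classifier for property names, following the rules above:
// a leading underscore marks a user-defined property, `_postman` is reserved,
// and a further underscore separates an optional namespace from the unit name.
function classifyProperty(name) {
  if (!name.startsWith("_")) {
    return { kind: "core", unit: name };
  }
  // Assumption: the reserved `_postman` name also reserves the `postman` namespace.
  if (name === "_postman" || name.startsWith("_postman_")) {
    return { kind: "reserved", unit: name };
  }
  const rest = name.slice(1);
  const sep = rest.indexOf("_");
  if (sep <= 0) {
    // No namespace prefix: allowed, but not the recommended form.
    return { kind: "custom", namespace: null, unit: rest };
  }
  return { kind: "custom", namespace: rest.slice(0, sep), unit: rest.slice(sep + 1) };
}

for (const name of ["description", "_postman", "_mytool_details", "_notes"]) {
  console.log(name, JSON.stringify(classifyProperty(name)));
}
```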

Making the units repeatable

Instead of a galaxy of mnemonics, we were excited by the idea of the same name behaving appropriately when placed in the right context. This could make a format parser relatively complex, but keeping things human-readable trumped any programmatic challenge.

By that logic, a unit can be placed anywhere: if it is expected there, it will be processed. If not, there are those oh-so-endearing warning messages to read or… ignore.
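The following is a rough sketch of context-dependent processing. The context names and the lists of expected units are assumptions drawn from the initial unit set above, not the draft's actual rules; `processUnits` is a hypothetical name.

```javascript
// Illustrative table of which units each context expects (assumed, not from the draft).
const EXPECTED_UNITS = {
  collection: ["name", "description", "variables", "events", "scripts", "version"],
  request: ["url", "header", "data", "description", "events"],
};

// Keep units that are expected in this context, pass underscore-prefixed
// custom properties through untouched, and warn about everything else.
function processUnits(context, obj, warn = console.warn) {
  const expected = new Set(EXPECTED_UNITS[context] || []);
  const result = {};
  for (const [key, value] of Object.entries(obj)) {
    if (expected.has(key) || key.startsWith("_")) {
      result[key] = value;
    } else {
      warn(`"${key}" is not expected in a ${context}; ignoring it`);
    }
  }
  return result;
}

// `description` is accepted here as it would be in a collection; `version` is not expected on a request.
const warnings = [];
const req = processUnits(
  "request",
  { url: "https://jsonblob.com/api/jsonBlob", description: "Create a blob", version: "1.0.0" },
  (msg) => warnings.push(msg)
);
console.log(Object.keys(req), warnings);
```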

Units expand to increase detail of definition

The easiest way to describe this feature is with an example:

```json
{
  "name": "My collection of APIs",
  "description": "This is a collection of APIs"
}
```

A couple of sections back we discussed how the description property describes the object within which it is defined.

In its minimal form, the value of this property is a string containing the description text. However, to really describe things, we need to be a lot more narrative than a simple block of text allows, so the description key can be expanded to contain more data.

```json
{
  "name": "My collection of APIs",
  "description": {
    "content": "<p>This is an example collection!</p>",
    "format": "text/html",
    "since": "2.0.0",
    "deprecated": "3.0.0",
    "author": "Some Person <[email protected]>"
  }
}
```

In the expanded form, the description text can be in a different format, and additional information such as versioning and author details can be added.

The entire v2 collection format is based on this expansion principle, which makes it very easy to create collections with minimal information and reduces the structural requirements for drafting a collection document.
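One way a tool could handle both forms is to normalize every unit to its expanded form before doing anything else. This is a minimal sketch for the description unit; the function name is hypothetical, and defaulting a bare string to `"text/plain"` is an assumption.

```javascript
// Sketch of the expansion principle: a unit may be written in its minimal form
// (a plain string) or its expanded form (an object). Normalize both to the
// expanded form so the rest of the tool only deals with one shape.
// Assumption: a bare string is treated as plain text ("text/plain").
function normalizeDescription(description) {
  if (typeof description === "string") {
    return { content: description, format: "text/plain" };
  }
  // Already expanded: keep every field, defaulting the format if it is missing.
  return { format: "text/plain", ...description };
}

console.log(normalizeDescription("This is a collection of APIs"));
console.log(normalizeDescription({
  content: "<p>This is an example collection!</p>",
  format: "text/html",
  since: "2.0.0",
}));
```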

Allow content control using variables

One of the coolest features of Postman Collections is the ability to refer to variables in their definitions. Variables allow one to write compact API definitions by placing repeated or replaceable content in variables.

The current (v1) format contains variable names as part of the definition. For example, an API endpoint can include variables: http://example.com:{{port}}/{{version}}/my-route/. The values of these variables, however, belong to a totally separate feature called environments. In the draft v2 format, we merged these two concepts by adding a new unit called variables.

Variables, in the v2 format, can be stored within the collection definition itself. These collection-level variables can be referred to from anywhere within the collection, much like variables in bash scripts or Sass/Less files. This adds a lot of power and versatility to the format: things such as test scripts, event handlers and text references can be stored as variables and used across the collection.
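A rough sketch of collection-level substitution: variables defined once in a root `variables` array are substituted into every string anywhere in the collection. The `id`/`value` field names follow the variable example later in this post; the function name, the sample collection and the choice to leave `@` references alone are assumptions.

```javascript
// Sketch: substitute {{id}} references in every string of a collection using the
// root `variables` array. References starting with `@` are left untouched here,
// since they follow a different rule.
function resolveCollection(collection) {
  const { variables = [], ...rest } = collection;
  const values = new Map(variables.map((v) => [v.id, String(v.value)]));
  const walk = (node) => {
    if (typeof node === "string") {
      return node.replace(/\{\{([^{}@]+)\}\}/g, (match, id) => (values.has(id) ? values.get(id) : match));
    }
    if (Array.isArray(node)) return node.map(walk);
    if (node && typeof node === "object") {
      return Object.fromEntries(Object.entries(node).map(([k, v]) => [k, walk(v)]));
    }
    return node; // numbers, booleans, null
  };
  return walk(rest);
}

// Hypothetical collection: a base URL and a test script stored as variables.
const collection = {
  variables: [
    { id: "baseUrl", value: "https://jsonblob.com/api" },
    { id: "statusTest", value: 'tests["Status code is 200"] = responseCode.code === 200;' },
  ],
  requests: [
    { name: "Create a Blob", url: "{{baseUrl}}/jsonBlob", tests: "{{statusTest}}" },
  ],
};
console.log(JSON.stringify(resolveCollection(collection), null, 2));
```

Unknown names are left as-is, so a missing variable stays visible in the output instead of silently becoming an empty string.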

By design, the contents of a variable are units themselves, which gives them the same versatility as the rest of the structure. Variables are designed to have routines that convert them to strings, so that they can be included as part of the value of another unit.

Decoupling units and allow named cross-referencing

A very large collection file can indicate one of two things: lots of data, or lots of unmanageable data. With lexical identification, it is easy to lose context within multi-level nesting or while traversing a very long array in the JSON.

A better way to manage things would be to organize properties in specific arrays and refer to them from wherever they are needed. Other formats and markup languages have solved this with named identifiers: for example, HTML uses the #element-id fragment of a URL to reference named anchors, and SVG uses the same syntax to refer to gradient elements. Taking inspiration from this, we used our variable referencing concept to connect distant objects.

We chose @ as a control character for this. Normally, a variable reference inserts the variable’s content converted to the data type of the target (a string, for instance). With @, the target is instead replaced by the source data, keeping the source’s data type.

```json
{
  "variables": [{
    "value": {
      "protocol": "http",
      "domain": "example.com"
    },
    "type": "url",
    "id": "var1"
  }],
  "target": "{{@var1}}"
}
```

The result of variable processing would be:

```json
{
  "target": "http://example.com/"
}
```
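A minimal sketch of the two reference styles, under stated assumptions: `{{@id}}` as the entire value replaces the target with a typed value, while `{{id}}` inside a string inlines the variable’s string form. The `UrlUnit` class and the `protocol://domain/` rendering are hypothetical, chosen to match the result shown above.

```javascript
// Hypothetical typed unit for `type: "url"` variables. Its string form (and its
// JSON serialization) is protocol://domain/, matching the example result above.
class UrlUnit {
  constructor({ protocol, domain }) { this.protocol = protocol; this.domain = domain; }
  toString() { return `${this.protocol}://${this.domain}/`; }
  toJSON() { return this.toString(); }
}

const TYPES = { url: (value) => new UrlUnit(value) };

function resolveValue(target, variables) {
  const byId = new Map(variables.map((v) => [v.id, v]));
  const typed = (v) => (TYPES[v.type] ? TYPES[v.type](v.value) : v.value);
  // "{{@id}}" as the whole value: replace the target, keeping the source data type.
  const whole = /^\{\{@([^{}]+)\}\}$/.exec(target);
  if (whole && byId.has(whole[1])) return typed(byId.get(whole[1]));
  // Plain "{{id}}": inline the variable's string form into the target string.
  return target.replace(/\{\{([^{}@]+)\}\}/g, (m, id) => (byId.has(id) ? String(typed(byId.get(id))) : m));
}

const variables = [{ id: "var1", type: "url", value: { protocol: "http", domain: "example.com" } }];
const target = resolveValue("{{@var1}}", variables);
console.log(target instanceof UrlUnit);               // → true
console.log(JSON.stringify({ target }));              // → {"target":"http://example.com/"}
console.log(resolveValue("see {{var1}}", variables)); // → see http://example.com/
```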

With these changes, what does the new format look like?

The road ahead

As we proceed with drafting this format, the aim is first to cover all the functionality of the v1 format and then to add units covering finer aspects of API definition, documentation, testing, etc. Simultaneously, we will develop converters between the v1 and v2 formats and integrate them with as many API development toolchains as possible.

Update 2-Jul-13: JSON Schema released for Collection Format v1

If this format looks like a positive step towards solving any long-standing issues, or if you have suggestions that would improve the work, feel free to mail us at [email protected] or comment here. Better still, if you’d like to contribute, we would be happy to have you as a collaborator.

There are some excellent leaps forward in this new collection format. I can see myself writing and editing collections instead of constantly overwriting them with Postman. There is one place you didn’t address that might be worth some time: the scripts. If they are just escaped text blobs with no structure, they become extremely hard to code review. If scripts are to become maintainable, being able to see clearly what changed when someone edits them is important. Code review tools usually make this clear, but the current format (and the proposed format) make the proposed changes to scripts extremely hard to read in those tools. I usually have to load the script into Postman to read it, but then I’ve lost the context of what was there before the change.

Just some thoughts about one place where I feel the collection format needs improvement. I love all the other changes; they will definitely make organizing and setting up tests easier.

Hi Jason. We are really glad that you feel this is going in a direction that would benefit the general workflow of working with APIs.

The scripting ability within Postman has been a useful tool for many, and Postman users have proposed a number of upgrades to it. One of them is the ability to better source scripts and to reuse scripts across requests.

In this draft format, we also realised that scripts should be able to deal with arguments and return values, putting them on par with any other scripting host. As such, we introduced the concept of “events”. The event-driven paradigm is universal and easy to adopt.

“test” and “prerequest” are events that collection or request execution is supposed to trigger (with arguments and expecting return data). The events can have an anonymous script defined in it or can refer to a named script defined within the root “scripts” array.

This “scripts” array can define a script with its interpreter type and body, and should also be able to “source” scripts from a URL, git, SSH, etc. At least, that’s the plan. 🙂 http://hastebin.com/xudidovoqo.tex
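A rough sketch of how an event might be resolved to either an anonymous inline script or a named script in the root “scripts” array. Only “scripts”, “test” and “prerequest” come from the reply above; the `listen`, `ref`, `script`, `name`, `type` and `exec` field names and the function name are hypothetical.

```javascript
// Sketch: find the script for an event on a request. An event either carries
// an anonymous inline script or refers by name to an entry in `scripts`.
function scriptForEvent(collection, request, eventName) {
  const event = (request.events || []).find((e) => e.listen === eventName);
  if (!event) return null;
  if (event.script) return event.script; // anonymous, inline script
  const named = (collection.scripts || []).find((s) => s.name === event.ref);
  if (!named) throw new Error(`Unknown script "${event.ref}" for event "${eventName}"`);
  return named;
}

const collection = {
  scripts: [{
    name: "status-ok",
    type: "text/javascript",
    exec: 'tests["Status code is 200"] = responseCode.code === 200;',
  }],
};
const request = {
  events: [
    { listen: "test", ref: "status-ok" },
    { listen: "prerequest", script: { type: "text/javascript", exec: "// nothing yet" } },
  ],
};
console.log(scriptForEvent(collection, request, "test").name); // → status-ok
```

Because the named script lives in one place, every request that listens for “test” with `ref: "status-ok"` shares the same code.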

Nevertheless, the sourcing part is slightly tricky owing to a number of security and communication issues. For one, it is extremely difficult to source local scripts from within the Chrome sandbox. However, with the “sync” service we introduced (see post: https://blog.postman.com/2015/06/02/getting-started-with-postman-team-sync/), it might be easier to perform these communications at the server level and then push the results down to the Postman app.

🙂

You should be able to break the scripts into lists of strings in the storage format, convert them back into blocks of text on import, and still do the same security checks you do now. It would improve the readability of the scripts in the files and make code review a lot easier.

Array of lines?

```json
"exec": [
  "line 1;",
  "line 2;"
]
```

I think so, here’s a real example:

array style:

```
"tests": [
  '// Testing script',
  'tests["msid is created"] = responseBody.has("msid");',
  'var retData = JSON.parse(responseBody);',
  'postman.setEnvironmentVariable("agid2", retData.data.agid);',
  'tests["Status code is 200"] = responseCode.code === 200;',
  '',
]
```

current text block style:

```json
"tests": "// Testing script \ntests[\"msid is created\"] = responseBody.has(\"msid\"); \nvar retData = JSON.parse(responseBody); \npostman.setEnvironmentVariable(\"agid2\", retData.data.agid);\ntests[\"Status code is 200\"] = responseCode.code === 200;\n\n"
```

Personally I think the array is easier to read, easier to write and much easier to review.
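The round trip proposed in this thread is straightforward to sketch: split a script into lines for storage and join them back into one block on import. The function names are hypothetical; note that this sketch splits on `\n` only, so `\r\n` line endings would leave a trailing `\r` on each line.

```javascript
// Store a script as an array of lines; join it back into a block of text on import.
// split("\n") and join("\n") are exact inverses, so the round trip is lossless.
function toLines(scriptText) {
  return scriptText.split("\n");
}

function fromLines(lines) {
  return lines.join("\n");
}

const block = '// Testing script\ntests["msid is created"] = responseBody.has("msid");\n';
const lines = toLines(block);
console.log(JSON.stringify(lines, null, 2)); // one line of script per line of JSON
console.log(fromLines(lines) === block);     // → true
```

Serialized with one array element per line, a change to a single statement shows up as a single changed line in a diff, which is the readability gain the commenters describe.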

After giving this some careful thought, a statement array in scripts seems to be a good choice, as we’ve discussed here.

I will keep you posted.

Thank you. One of the things I’ve liked about Postman is that I’ve always felt listened to. I think it’s really important to recognize that while sync is great, sometimes you want to be careful about which changes are allowed. The lock is great for that, but it creates a bottleneck on who can edit the collection. Most larger teams use some sort of code review tool for their code, so it makes sense to ensure that they can easily review their collections too.

I really like the new format. As discussed above, better handling of scripts->exec would really benefit me.

I take the tests and import them into Jira/Xray, and for now that is only achievable with regex.

Otherwise keep up the good work.

I read the whole article, slides and the other comments. I like the new format.

Avoiding code duplication (in Tests) is an important aspect to me.

For example, I want to have the code that checks the schema of a response in one place (instead of copying it into every request).

Looking at your new file format, this should be possible, since scripts can be defined once and then referenced with the control character “@”.

I definitely agree.

But can you tell us whether these defined scripts are handled in the current Postman version?

One feature missing in the v1 schema and associated tooling is the persistence in Postman and redirection in a Newman environment of test files sent with form-data and binary POSTs and PUTs. Files can be chosen in the Postman interface but when saving the collection (for later use in Newman) only the file name is saved in the form case and nothing is saved in the binary case. All file names are lost between runs of Postman. I understand the problems doing this in a browser environment but at least the name should be saved in both cases. Newman should then look for the files in the same directory as the collection file. This is minimal functionality, more complex schemes are possible.
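The minimal behaviour suggested here can be sketched with Node’s `path` module: resolve the saved file name against the directory containing the collection file. This is a sketch of the proposal in this comment, not Newman’s actual implementation; the function name and paths are hypothetical.

```javascript
// Resolve a saved form-data or binary file name relative to the collection file.
const path = require("path");

function resolveRequestFile(collectionPath, savedFileName) {
  // Absolute names are returned as-is; relative names are taken from the collection's directory.
  return path.resolve(path.dirname(collectionPath), savedFileName);
}

console.log(resolveRequestFile("/home/me/tests/api.postman_collection.json", "fixtures/upload.png"));
// → /home/me/tests/fixtures/upload.png (on POSIX systems)
```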

One further point: when importing a collection file and exporting it back to disk, the collection ID, folder IDs and request IDs may change. This leads to very annoying comparisons.

We are thinking of fixing that in the following way:

1. auto generated ids are removed from the collection on export.

2. only the auto-generated ID of the collection is retained (as the _postman_id field) and is used to identify the same or similar collections
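A minimal sketch of the two export rules above, assuming the auto-generated IDs live in properties named `id`. The function name is hypothetical and the nested sample uses illustrative, v2-style property names.

```javascript
// Remove auto-generated `id` fields at every level, but keep the
// collection's own ID as `_postman_id` at the root.
function stripIdsForExport(collection) {
  const strip = (node) => {
    if (Array.isArray(node)) return node.map(strip);
    if (node && typeof node === "object") {
      const out = {};
      for (const [key, value] of Object.entries(node)) {
        if (key !== "id") out[key] = strip(value); // drop nested IDs
      }
      return out;
    }
    return node;
  };
  const { id, ...rest } = collection;
  return { _postman_id: id, ...strip(rest) };
}

// Hypothetical nested collection; property names are illustrative.
const collection = {
  id: "6fd141b6-1ef7-ea36-6fb8-917f5d4e938b",
  name: "JSONBlob Core API",
  item: [{
    id: "76e8a3f4-4b8e-781f-2c1b-76a66b769158",
    name: "JSON API Services",
    item: [{ id: "1bc7841d-ba1a-3784-25eb-9c40adeefb40", name: "Create a Blob" }],
  }],
};
console.log(JSON.stringify(stripIdsForExport(collection), null, 2));
```

Applied to a v1 file, this would also break the folder `order` references, so it only fits a format that, like the draft v2, does not rely on IDs for structure.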

Does that break any use case that we do not know of?

Just thinking about some edge cases: having multiple requests with the same name in different folders of the collection… Won’t GUID removal break these?

(There is a simple workaround: rename the requests so that there are no duplicates. That can take a long time in a 500-request collection, though.)

Wouldn’t it be possible to retain imported GUIDs instead of regenerating new ones?

They could be kept along with the names and paths of binary files used in POST requests.

The new collection format does not depend on IDs for anything. So we could provide a place in the app to edit the collection’s ID; such IDs would then not be auto-generated and would be retained during export.

Thoughts?

Sounds great 🙂

Can I run a collection in the v2 format with Newman?

Hey Dev, you can indeed run a collection in the v2 format with newman v2.0.0 and above. More comprehensive information on the history of changes to newman is available here: https://github.com/postmanlabs/newman/blob/develop/CHANGELOG.md

The main problem with collection files is readability. Humans can’t quickly review updates in a VCS, because an export produces many unwanted changes. Each request ID changes for some reason…

What is the difference between version 2 and 2.1? Are there any breaking changes between 2 and 2.1?

Does anyone know how the 2.x format handles URLs? On export, each url has several attributes: `raw` (the whole thing), and `protocol`, `host`, `path` and `query` (the exploded sub-parts). I have a collection of a hundred or so requests in which I want to variable-ize the host part. It’s a pain that it’s defined as

`"host": ["foo", "com"]`

when I just want to create a simple sed- or jq-based rewriting rule.
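As a rough alternative to sed or jq, a short script can walk an exported collection and rewrite every url object. This sketch assumes url objects shaped as described in this comment (`raw`, `protocol`, `host` as an array, and so on). It updates both `host` and `raw`, so tools that read either field see the change. The function name, the `{{host}}` variable name and the sample collection are hypothetical.

```javascript
// Replace the host of every url object with a {{host}} variable, updating both
// the exploded `host` array and the `raw` string. Mutates and returns `node`.
function variableizeHost(node, variable = "{{host}}") {
  if (Array.isArray(node)) {
    node.forEach((n) => variableizeHost(n, variable));
    return node;
  }
  if (node && typeof node === "object") {
    const url = node.url;
    if (url && typeof url === "object" && Array.isArray(url.host)) {
      const oldHost = url.host.join(".");
      url.host = [variable];
      // Replaces the first occurrence, which is the host part of the raw URL.
      if (typeof url.raw === "string") url.raw = url.raw.replace(oldHost, variable);
    }
    Object.values(node).forEach((v) => variableizeHost(v, variable));
  }
  return node;
}

// Hypothetical exported collection with one request.
const collection = {
  item: [{
    name: "List users",
    request: { url: { raw: "https://foo.com/users?id=1", protocol: "https", host: ["foo", "com"], path: ["users"] } },
  }],
};
variableizeHost(collection);
console.log(JSON.stringify(collection.item[0].request.url));
```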

At the time of testing, Postman 5.5.3 on OS X seems to ignore the `raw` attribute and require the other parts.

Hi, I am using Node and documented my app with Postman. I want to provide a REST service to another app that needs a WSDL file describing my web service. Can I obtain this file from Postman?

I am trying to transform a few collections from v1 to v2 using the CLI:

`postman-collection-transformer convert -i ~/work/user.json -o ~/work/user2.json -j 1.0.0 -p 2.0.0 --pretty --overwrite --retain-ids`

The conversion succeeds, but the “items” in the resulting v2 collection don’t get populated from the “requests” in the v1 collection. Please advise!

Hi Surbhi, please contact our support team at http://www.postman.com/support and they’ll be able to help you. 🙂

Will Postman change the export version in the future?