The case for “developer experience”

This piece first appeared in Andreessen Horowitz’s Future publication in September 2021. At the time, I was founder and CEO of Akita Software, an API observability company backed by Andreessen Horowitz.

This piece was the culmination of a series of posts I had written on the Akita blog as I was wrapping my head around what our goals were around developer experience. All of these posts came out of my desire to get at one main idea: developer experience is much more nuanced than tools that “make the details go away” imply. I had been nervous about putting all of my thoughts together in such a long essay, but a lot of people wrote to me afterwards saying they had been thinking about similar ideas and had never seen them all put together in one place.

Since the original publication of this essay, Postman acquired Akita. This blog post represents a shared vision between Akita and Postman. If you want to see what we’re now working on together, you can sign up to be an early user of the Akita team’s beta Postman Live Collections Agent here.

There’s been a lot of buzz around APIs, “no code,” and “low code”: developers can add new features more easily than ever before! While it’s true that this new wave of tools has helped developers build bigger systems, and build them faster, it turns out that developers are now spending their time worrying about an unintended consequence: how exactly are they supposed to manage and coordinate these fast-moving, heterogeneous systems—and free themselves to build?

Empowering developers to build matters more than ever before. The number of developers is currently larger than the population of Australia, growing faster than the population of Brazil, and set to exceed the population of Canada. As every company continues to become a technology company inside—regardless of product or service—developers are getting more of a say. Much like we saw the rise of user experience (UX) and the field of interaction design emerge when computing began mainstreaming decades ago—from command line interfaces to the “GUIification of everything” to, over the past decade, the rise of design—we’re now seeing the rise of developers: as buyers, as influencers, as a creative class.

But because of our fascination with simple stories, we’re currently missing the bigger picture, and the bigger opportunity here, to realize the full potential of developer experience (DX). As an industry, we’ve been so caught up in finally having the spotlight on developers, and on developer tools, that we’ve oversimplified the narrative around what developers want. What I mean by developer experience is the sum total of how developers interface with their tools, end-to-end, day-in and day-out. Sure, there’s more focus than ever on how developers use and adopt tools (even in consumer tools), and there are entire talks and panels devoted to the topic of so-called “DX,” yet large parts of developer experience are still ignored. With developers spending less than a third of their time actually writing code, developer experience includes all the other stuff: maintaining code, testing, security issues, addressing incidents, and more. And many of these aspects of developer experience continue getting ignored because they’re complex, they’re messy, and they don’t have “silver bullet” solutions.

Good developer experience is less about the Steve Jobs come-live-in-my-world design mentality than about accepting and designing for the inevitable evolution of software and tech stacks (organically evolving ecosystems, not centrally planned, monolithic entities), which means that developers work in messy, complex environments. So where do we go from here? How can we redefine and expand our notion of “developer experience,” and the way we build, buy, and use developer tools, so the tools fit the way developers actually work, helping them build and innovate better?

The core conflict of developer tools: abstraction vs. complexity

I’ve been working on developer tools for over a decade, and programming for even longer than that. But I only recently realized the core of what’s holding us back: that most of the conversations around developer experience are about how to make it easier to write new code, in a vacuum… when in reality, most developers are writing new code that needs to play well with old code. This goes beyond the obvious complaints of technical debt, lack of explainability, and other issues.

My major revelation was that there are actually two categories of tools, and therefore two different categories of developer experience needs: abstraction tools (which assume we code in a vacuum) and complexity-exploring tools (which assume we work in complex environments). Most developer experience work until now has focused solely on the former category, abstraction, where there are more straightforward ways to understand good developer experience than in the latter.

When I say abstraction, I’m referring to the computer science concept of making models that can be used and reused without having to rewrite things when certain implementation details change. Here, I’m using the term “abstraction” to refer to a broad category of tools—from API providers to SaaS infrastructure to programming languages—that simplify tasks “away.” Such abstraction is the driving force behind the growth of the API economy (think Stripe and Twilio), and has also been celebrated in the recent no-code movement.

Abstraction, it is argued, is A Good Thing, because it makes it easier for everyone to create software. It is also the force behind the desire for that elusive silver bullet. But for developers working within real-world, messy ecosystems (as opposed to planned gardens), there’s a Dark Side of abstraction—that such silver bullets do not exist.

It’s certainly possible, for some problems, to automate the problem away. But other problems (for instance, finding and fixing bugs) cannot be fully abstracted, often requiring user input. On top of that, at some point when running a system, you’re inevitably going to need to cross an abstraction barrier (like, say, call out from your language across the network, or reach inside the database). Moreover, different problems require different abstractions—which means there’s not going to be one single abstraction to address all your needs.

I first ran into this dark side of abstraction when I created a programming language as part of my PhD dissertation—a language called Jeeves that automatically enforced data authorization policies. It seemed like a noble goal, freeing the programmer to focus on the rest of the software, instead of spending time writing checks across the code, enforcing who is allowed to see what. But when I tried building a web app using a prototype of the language, I called out to the database and immediately realized that the abstraction I had built was a lie: my language only enforced its guarantees in the jurisdiction of its own runtime, and the guarantees did not extend to database calls. I had expected a programming language to solve the problem of unauthorized access, but web apps have databases, frontends, and, increasingly, remote procedure calls to other services and APIs. Even if it were possible to corral every component of a system into a single, unified policy enforcement framework, there’s the additional catch that most software teams don’t know what the policies are supposed to be in the first place.
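To make the boundary problem concrete, here is a minimal Python sketch. It is not Jeeves or its API: `Guarded`, `reveal`, and `save_to_database` are hypothetical names invented for illustration, and a plain dictionary stands in for the database. The sketch only shows the general shape of the issue, which is that a policy attached to a value inside one runtime is lost as soon as the raw value crosses into a store that knows nothing about the policy.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Guarded:
    """A value paired with a policy deciding which viewers may see it (hypothetical)."""
    secret: str
    placeholder: str
    may_view: Callable[[str], bool]

    def reveal(self, viewer: str) -> str:
        # Inside the "runtime", every read goes through the policy check.
        return self.secret if self.may_view(viewer) else self.placeholder


def save_to_database(table: dict, key: str, value: Guarded) -> None:
    # The stand-in database stores plain strings, so the policy is dropped here.
    table[key] = value.secret


email = Guarded("alice@example.com", "[hidden]", lambda viewer: viewer == "alice")
users_table: dict = {}
save_to_database(users_table, "alice_email", email)

in_runtime = email.reveal("mallory")        # policy enforced inside the runtime
via_database = users_table["alice_email"]   # policy gone: the raw value is readable
print(in_runtime, via_database)
```

Any code that reads `users_table` directly bypasses `reveal`, which is the same gap described above between a language runtime and the database it calls out to.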

What I needed here wasn’t more abstraction, but to embrace the complexity of the software and tech stacks. Seems counterintuitive, given the tailwinds and trends for where software and developer experience have been going (services, no code, more). But in many cases, it’s far more empowering to the developer to help them explore and embrace existing complexity, rather than introduce more complexity when trying to automate things “away.”

Developers work in rainforests, not planned gardens

In many cases, what developers need is tools that help them find and fix issues in their existing software systems, created using the existing tools. But wait, Jean (you might be thinking), there *are* lots of tools out there that help us embrace complexity! If you use GraphQL, you can map out your API graph using Apollo. If you use an API gateway, you can not only understand how all of your APIs are talking to each other, but enforce policies about how APIs should be talking to each other.

Here’s the thing, though. The effectiveness of these tools depends on being able to switch over to new tools, whereas the success of a complexity-exploring tool lies in how seamlessly it can work with existing tools.

Today, most complexity-exploring tools are still built on the underlying assumption that it’s possible to put all your software into one language, framework, or even a single unified tech stack. But that assumption is becoming increasingly outdated! When software was small in size and still relatively simple, it made sense to think of tech stacks as planned gardens. Today, the rise of APIs and service-oriented architectures, bottom-up adoption of developer tools industrywide, and the aging of software systems has led tech stacks to become organically evolving ecosystems, for better and for worse.

Software tech stacks today look way more like a rainforest—with animals and plants co-existing, competing, living, dying, growing, interacting in unplanned ways—than like a planned garden.

So if I were to coin a law, it would be this: any system of sufficient size and maturity will always involve multiple languages and runtimes. Software is heterogeneous, and until we as a community accept this fact, we’re upper-bounding how far we can get with developer experience. I call this The Software Heterogeneity Problem, and it has significant consequences for software development, management, and performance.

The Software Heterogeneity Problem

Here’s how the Software Heterogeneity Problem came to pose one of the biggest challenges to good developer experience today: even the simplest web app has a front end, an application layer, and a database. As the needs of a system evolve, along with the tools available, tech stacks inevitably become messy collages of tools, full of their own languages, technologies, and workflows.

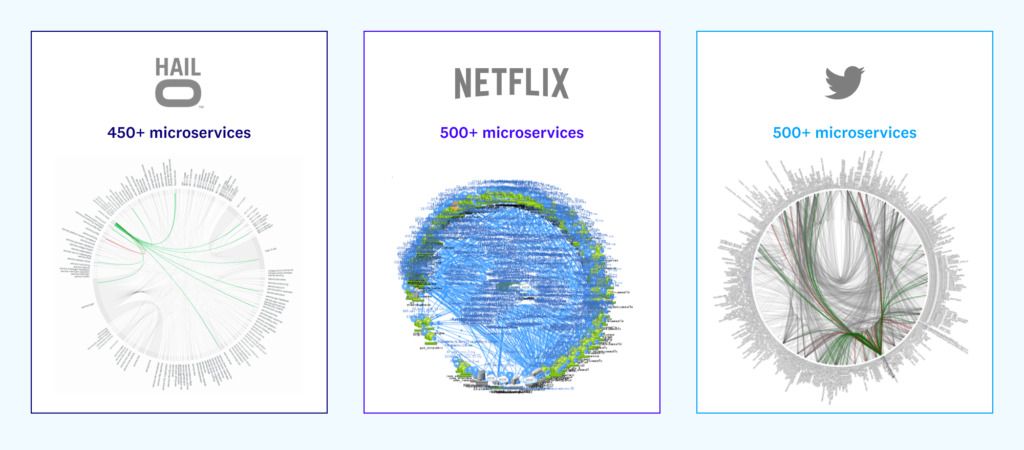

Moreover, high-performance technology organizations from Netflix to Amazon to Uber are increasingly adopting complex Death Star-like systems with thousands of microservices.

One implication of all this is that testing software—as a way to discover if source code violates a specification—makes less and less sense in isolation.

More generally, tools that give automated software assurance to one service at a time become less effective in scope given the messy realities they live in. For example, static code analyzers that work by semantically modeling the code assume the worst when checking across network calls, so they don’t have much utility for reasoning about cross-service interactions.

But it’s not just in these types of systems where the utility of application-level software quality tools is lost. The minute that code calls any code outside the known system, the scope of code-level solutions becomes greatly diminished.

And even though it’s deceptively easy to push out an update to a SaaS product, active and ongoing interdependencies can make SaaS applications much more painful to update than the shrink-wrapped software of ye olde. For instance, cross-component syncing—like coordinating data type changes across API consumers and producers—is becoming more and more of an issue. One study of which bugs cause high-severity incidents (that occurred recently during the production runs across hundreds of Microsoft Azure services) found that 21% of the cloud outages resulted from inconsistent data format assumptions across different software components and versions. This is not surprising, as changelogs are currently huge walls of text. (See Shopify’s changelog, an example of the state of the art.) Typed interface description languages can only help to the extent that there is cross-organization standardization (rare to see), and if developers provide more precise documentation of data formats than “string.”
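As a small illustration of an inconsistent data format assumption, consider the following Python sketch. Everything in it is hypothetical: the `created_at` field, the two producer versions, and the consumer are invented for the example and are not taken from the study or from any real API. The interface description only says the field is a string, so both producer versions pass the check, yet the consumer breaks after the producer changes format.

```python
from datetime import datetime, timezone

# Hypothetical interface description: both sides agree only that the field is a string.
SCHEMA = {"created_at": str}


def conforms(payload: dict) -> bool:
    """Check the payload against the (very loose) typed interface."""
    return all(isinstance(payload.get(name), kind) for name, kind in SCHEMA.items())


def producer_v1() -> dict:
    return {"created_at": "1631000000"}  # epoch seconds, sent as a string


def producer_v2() -> dict:
    return {"created_at": "2021-09-07T07:33:20+00:00"}  # ISO 8601 after an upgrade


def consumer(payload: dict) -> datetime:
    # The consumer was written against v1 and still assumes epoch seconds.
    return datetime.fromtimestamp(int(payload["created_at"]), tz=timezone.utc)


for produce in (producer_v1, producer_v2):
    payload = produce()
    try:
        outcome = consumer(payload).isoformat()
    except ValueError as err:
        outcome = f"consumer failed: {err}"
    print(produce.__name__, "schema ok:", conforms(payload), "->", outcome)
```

A more precise description of the field (for example, a declared timestamp format rather than a bare string) is the kind of documentation that would let a tool flag the v2 change before it reaches production.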

The consequences of software heterogeneity: down with SDLC, up with observability

The rise of SaaS and APIs has led to more decentralized system “design” and more “testing” in production. Services now come and go in the service graph, with emergent behaviors arising from new interactions. This means that the software development life cycle (SDLC) as we know it is dead… even though it persists like a zombie in many enterprises today.

The traditional notion of a software development life cycle as something linear, organized, and lockstep (starting with design, then moving to implementation, then testing, then maintenance) no longer holds. Today, software development happens far more nonlinearly, organically, and bottom-up than before. Ironically, the rise of SaaS and APIs, meant to simplify things and free developers to innovate on the core, has made it harder to make changes to software. (I’ve written before about how APIs in practice can be both the illness AND the cure.)

So what to do then? How do we adapt to the modern rainforest?

If our notion of “what is supposed to happen” is getting weaker, then developer experience becomes about helping developers and IT teams understand what their software systems are doing in the first place. We can’t see what is supposed to happen, so we need to see what IS happening. This means we need to shift from a mindset and approach of monitoring to a mindset and approach of observing—which in turn suggests that the future of developer experience hinges on better experience of observability.

Most people think about observability (and devops observability tools) in terms of the “three pillars” of logs, metrics, and traces. But that’s like saying programming is just manipulating assembly instructions, when it’s actually about building the software functionality you need. Observability is about building models of your software so you can build software more quickly. Today, observability tools give developers logs, metrics, and traces—and then developers build models of software behavior in their heads. While these tools certainly help make sense of those tech-stack Death Stars, the granularity and fidelity of the information they provide is only as good as how well developers can instrument their code and/or get the appropriate logs, metrics, and traces.

Current tools largely work for software systems that a developer owns and is willing and able to instrument. As observability tools evolve, we’re going to see more and more of the model-building move into the tools themselves, freeing developers to understand their systems without having to hold those models in their heads. And to get there, we’re going to need to see a lot more innovation in developer experience around such complexity-exploring tools.
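One way to picture model-building moving into the tool is the Python sketch below. It is only an illustration under stated assumptions: the `observed` records, their shape, and the `build_model` function are hypothetical and do not describe how any particular observability product works. The idea is that a tool can summarize observed traffic into a small model (who calls whom, and what each endpoint has actually returned) instead of leaving the developer to reconstruct that picture from raw logs.

```python
from collections import defaultdict

# Hypothetical observed traffic: (caller, callee, endpoint, response body).
observed = [
    ("web", "orders", "GET /orders", {"id": 1, "total": 9.5}),
    ("web", "orders", "GET /orders", {"id": 2, "total": None}),
    ("orders", "billing", "POST /charge", {"ok": True}),
]


def build_model(records):
    """Summarize who calls what, and which field types each endpoint has returned."""
    calls = defaultdict(set)
    field_types = defaultdict(lambda: defaultdict(set))
    for caller, callee, endpoint, body in records:
        calls[caller].add(callee)
        for field, value in body.items():
            field_types[endpoint][field].add(type(value).__name__)
    return calls, field_types


calls, field_types = build_model(observed)
for endpoint, fields in field_types.items():
    for field, kinds in fields.items():
        print(endpoint, field, sorted(kinds))
```

In this toy data, the model surfaces that `total` is sometimes a number and sometimes missing a value, which is the kind of fact a developer would otherwise have to notice by reading individual log lines.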

So what does this all mean for designing developer experience?

We’re at a crossroads here: These next few years are going to be crucial for defining the future of developer experience. The developer experience category can either be limited by needing to tell a good story for CxOs—focused more on things like increasing short-term speed of feature development—or it can expand to where buyers spend money that matches where developer pain actually lies.

Since the tools follow the capital, let’s take a quick look at where we are today. There’s a popular narrative out there that companies don’t pay for developer tools, and that it’s hard to build a big business around developer tools. But this has been changing, as we saw with the acquisitions of Heroku and GitHub, as well as the value of companies like HashiCorp and Postman.

So what sets apart the tools that “make it” from those that don’t? The answer involves a discipline that’s often considered a dirty word among technical developers: design. By “design”, I mean reducing friction to help developers get to where they need to go. I don’t mean increasing prettiness or dialing up the trappings of user experiences with things like cute error messages, notifications, or dark mode. Nor do I mean developer “ergonomics,” which values moving faster and more efficiently through slick interfaces over a fuller notion of meeting developer needs and fitting into their workflows.

Since meeting developers’ needs is much easier if you’re automating away functionality, it makes sense that the tools that have been catching on are abstraction tools. In this case, good design is often synonymous with good product ergonomics! But design for complexity-exploring tools—which is more about meeting developers where they are—means digesting larger parts of the rainforest that are the developer’s ecosystem. This expanded notion of developer experience is much harder to achieve, but if we want the right tools to catch on, we should strive for better, more context-aware design here.

Truly absorbing design into developer experience therefore requires a few key mindset shifts, especially for developer-tools purists:

#1 Focus on the problem being solved

Especially for the kinds of cool technologies that could fill the complexity tooling gap, I often see an emphasis on technical capabilities—the features, the benefits, the specs—rather than the problems those tools are solving. Not only that, it’s considered acceptable to have a very shadowy, merely hypothetical picture of the user. Case in point: people talk about the pillars of observability as logs, metrics, and traces, instead of about goals like “understand your system behavior” or “catch breaking changes!”

Instead of adapting to the reality of rainforests, tooling enthusiasts often keep pounding away a worldview of software as planned gardens. Take the case of programming languages, where functional programming enthusiasts will make arguments about how their languages are better for developers for technical reasons (more guarantees, elegance)—yet aren’t related to the high-priority problems that software teams are experiencing. (This also has implications for why more “deep tech” tools aren’t making it out of academia and into startups.)

But surely developers would want the cool new tools, if only they knew about them? Software developers may want beautiful code and zero bugs, but what they need is to ship functional software on a schedule. In reality they need to be able to write mostly bug-free, okay-looking code, and to write it faster. They’re under pressure to meet their sprint goals and to address issues as they arise.

#2 Focus on fitting into existing workflows

It’s not that developers don’t “get” how cool the technology is, but that they don’t get how it can help them with their top-of-mind problems. Or, that those developers can’t reasonably transition from their workflows onto a completely new workflow.

When I asked developers why they adopted tool X or Y, the answer was often that it worked with their programming language or infrastructure, or that it had the Slack/GitHub/Jira integrations they wanted. Anyone doing user research interviews with developers will quickly realize how much of an ecosystem each tool lives in—not just isolated workflows.

A lot of developer tools also assume a developer will switch to an entirely new toolchain to get a relatively small set of benefits. For most software teams, this is a nonstarter.

Instead, we need to focus on more interoperability with existing dev tools, as well as on more incremental improvements (yes) that aren’t a so-called paradigm shift but that actually work with what exists. For software analysis, this means focusing less on building a new universe than on meeting developer workflows where they are. For observability tools, it means requiring less buy-in to a specific framework (for instance, OpenTelemetry), and doing more work to meet developers where they already are.

#3 Focus on packaging and prioritization

If you’re one developer running something a few times for the purpose of showing that something is possible, then it’s fine for the output to be clunky, for you to have to query over it, or for you to have to hand-beautify it in order to understand it.

If this is a tool you’re going to be using day in and day out, however—or sharing the results with your team—then taking the time to better package it makes a huge difference. This packaging not only smooths out the rough edges and makes it easy for you (and others) to see the necessary output, but it also makes it easy for you to do what you want with the result. After all, the result is the first step, not the last.

However, this does not mean that the goal of design is perfection! For instance, many developer-tools enthusiasts and purists argue for the value of “soundness” or zero bugs, that is, that the tool be able to find every bug. Sure, if you’re building a spaceship where a single bug means that you lose lives and millions of dollars, it makes sense to go through possible bugs with a fine-toothed comb. But for the average web app, there’s a big tradeoff between fixing bugs and shipping features. When it comes to observability tools, for example, developers don’t actually need to observe every aspect of their systems. Instead, they just want a prioritized view, one that gives them visibility into what matters. Prioritization matters far more than soundness, bugs, or comprehensiveness.
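A prioritized view can be as simple as the following Python sketch. The endpoint statistics, the `prioritized` function, and its `top` and `min_requests` parameters are all hypothetical, and ranking by error rate is just one possible heuristic chosen for illustration. The point is that the tool deliberately shows a short, ordered list rather than everything it knows.

```python
# Hypothetical per-endpoint stats over some window: (endpoint, requests, errors).
stats = [
    ("GET /health", 50_000, 5),
    ("POST /checkout", 2_000, 180),
    ("GET /search", 20_000, 40),
    ("PUT /profile", 300, 2),
]


def prioritized(rows, top=2, min_requests=500):
    """Rank endpoints with enough traffic by error rate and return only the top few."""
    busy = [row for row in rows if row[1] >= min_requests]
    ranked = sorted(busy, key=lambda row: row[2] / row[1], reverse=True)
    return [(name, round(errors / requests, 4)) for name, requests, errors in ranked[:top]]


print(prioritized(stats))
```

The low-traffic endpoint is dropped and the healthy one never makes the list, so the developer sees only the two endpoints most likely to need attention.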

The above design principles may seem obvious, but when it comes to developer tools, the fact is that they’re simply not considered enough! If we are to achieve a first-class field of developer experience, we need to do a lot of things better as an industry.

Where do we go from here: developers, buyers, the industry

It’s easier for developers to create functionality than ever before, but software teams are going to get increasingly bottlenecked on chaos in their software until we have better ways to observe and understand their systems. Right now, we’re somewhere between acknowledging that we need better ways and people agreeing the problem is solved (or denying it even exists). So now is the time to define and push developer experience across multiple stakeholders.

For developers, we have a lot of language for things being “easy,” “one-click,” and “like magic” as associations for what constitutes good developer experience—because the tools that do this are the ones making money. The non-automatable parts of tools become the elephants in the room, weighing down developer experience. Both tool creators and tool users assume a high learning curve, which limits the ultimate impact and usefulness of the tools because there are alternatives that don’t need to be hard to use.

Since buyers prefer the “super easy” tools, it’s easy to fall into these polarized extremes where things are either “super easy” or for “the hardcore.” But problems only get fixed if we acknowledge them, expect more, and build a better developer experience. If developers are influencers, why don’t we demand better for ourselves, for the industry?

I see a lot of frameworks and APIs get held up as great examples of design, yet many wonder why their debuggers, performance profilers, and observability and monitoring tools can’t provide the same experience. It’s like we’re still in the old-school software era of developer experience—the Oracle to the Salesforce, the Salesforce to the Orbit—for complexity-revealing tools. On top of that, many of the “complexity-revealing” tools today are often assumed to be just for “experts.” But in fact, they are meant to aid developers in solving problems themselves, by revealing the necessary information. While complexity-exploring tools can’t automate the problem away, they can focus on providing the developer with the appropriate information to solve the problem themselves.

To be clear, the best tools will always combine abstraction with revealing complexity. Think of it as peeking under the hood of a car: even if you drive a super low-maintenance car, it’s still important to be able to peek under the hood if there’s a problem (and without needing to go back to the dealership).

For users, prioritizing developer experience means recognizing the importance of complexity-exploring tools—and voting with your usage. It also means being more willing to try tools that are a little rough around the edges and give feedback—it’s hard to create a good developer experience for something that hasn’t existed before!

Let’s stop dreaming about the “One Language to Rule Them All” and start demanding better tools to improve developer comfort—and, therefore, developer productivity.

For buyers, whether CTOs and CIOs or IT and engineering leaders, prioritizing developer productivity means being skeptical of the simple-sounding “silver bullet” solutions, and not looking the other way when other major developer needs are going unmet. Buyers need to get out of the mentality of thinking of developer tooling as only abstraction tools: infrastructure, APIs, and other bricks or potted plants that fit nicely into the “planned garden.”

Are we going to keep accumulating more ways to build faster, while relying on developers to hold the complexity in their heads, for bigger and bigger systems? Or are we going to crack the magical, just-right developer experience for complexity-embracing tools?

No matter what, developer experience for such tools is going to be the thing. Having good complexity-exploring tools is going to be a crucial competitive advantage—and having good developer experience for exploring complexity will be a key advantage for developer tools. These tools will change how software gets made…but it’s about time the software industry changes how it values these tools for us to innovate on, and with, them.
