Meet Matrix: Postman’s Internal Tool for Working with Microservices

Postman’s engineering team follows a microservice architecture, which exposed a growing set of challenges as the team expanded. In a microservice architecture, different parts of a single request can be fulfilled by different services. These services may be owned by entirely different teams and may have different system requirements, which makes running everything on a single system difficult.

A solution? To increase developer productivity and decrease lead time for changes, we added a new tool—called Matrix—to Postman’s development loop.

Plug into the Matrix

Matrix is a command-line interface (CLI) tool that lets developers easily plug their local environments into the Postman beta environment. After installing Matrix, developers simply need to start their local service, and—voila!—everything works as if it were based in their local system.

When we started building Matrix, we focused on two important capabilities:

- Developers don’t need to know about or configure any authentication mechanisms used for inter-service communication.

- Data between the local system and beta environment should be consistent.

We also wanted to avoid re-architecting the entire system if we had to add features, with the obvious constraint that we couldn’t run 40 microservices in a developer’s local system. We needed a way for the local development environment to plug itself into the beta environment and behave as part of the Postman service network.

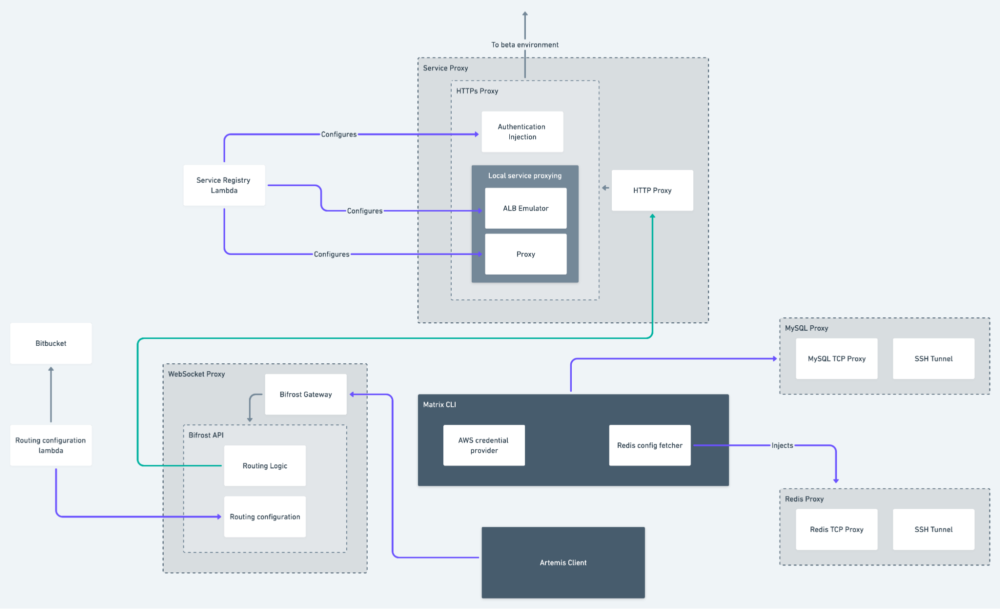

So the base Matrix system consisted of two blocks, apart from the CLI:

- Service proxy to interact with the beta environment

- Database proxy to interact with a common datastore

Service proxy

The service proxy is responsible for intercepting any outbound HTTP request from the local build of the service, injecting the required authentication mechanism into the request, and forwarding it to the final target. Pretty straightforward.
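The interception step described above can be pictured with a minimal sketch. It is not Matrix’s actual code: the header name `x-service-token`, the shape of the request object, and the function names `injectAuth` and `forward` are all assumptions made for illustration.

```javascript
// Hypothetical sketch: the header name, token, and request shape are
// illustrative assumptions, not Matrix internals.
function injectAuth(request, credentials) {
  // Copy the headers so the caller's request object is not mutated.
  const headers = {
    ...request.headers,
    [credentials.headerName]: credentials.token,
  };
  return { ...request, headers };
}

// `send` stands in for whatever HTTP client actually forwards the request.
function forward(request, credentials, send) {
  return send(injectAuth(request, credentials));
}

const outbound = {
  method: 'GET',
  url: 'https://sync.postman-beta.tech/membership',
  headers: { accept: 'application/json' },
};
const proxied = injectAuth(outbound, {
  headerName: 'x-service-token',
  token: 'example-token',
});
console.log(Object.keys(proxied.headers));
```

The local service never sees the credential in this arrangement; only the proxy does, which is the property the first capability in the list above asks for.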

This component also had to simulate the path-based routing we use in our cloud infrastructure, so that two services running locally can communicate with each other. Consider the subdomain sync.postman-beta.tech. It is the entry point for about four services, and the target for each request is decided by the path-based routing logic in the application load balancer (ALB). For example, /membership is routed to the Membership service and /notification to the Notifications service, while any other path defaults to the Sync service.

If a developer works on the Membership service locally and tests a flow that involves debugging the Notifications service, the proxy component should be smart enough to know that /notification routes on the sync.postman-beta.tech domain must go to the other local service, while everything else can be routed to the beta environment.

To solve this, the service proxy constantly talks to a service registry and updates its own configuration in real time, without involving the developers.
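The routing decision can be sketched as a small lookup. This is an illustration under stated assumptions: the rule table mirrors the ALB example in the text, while the `localServices` map (standing in for what the proxy learns from the service registry), the port numbers, and the function names are hypothetical.

```javascript
// Path-prefix rules mirroring the ALB example in the text. The data shapes
// and service identifiers are illustrative assumptions.
const albRules = [
  { prefix: '/membership', service: 'membership' },
  { prefix: '/notification', service: 'notifications' },
];
const DEFAULT_SERVICE = 'sync';

// Map a request path to the service that owns it, falling back to the default.
function resolveService(path) {
  const rule = albRules.find(
    (r) => path === r.prefix || path.startsWith(r.prefix + '/')
  );
  return rule ? rule.service : DEFAULT_SERVICE;
}

// `localServices` maps service names to local ports. Services missing from
// the map are assumed to run only in the beta environment.
function resolveTarget(host, path, localServices) {
  const service = resolveService(path);
  const localPort = localServices[service];
  const target =
    localPort !== undefined
      ? `http://localhost:${localPort}${path}`
      : `https://${host}${path}`;
  return { service, target };
}

// The Notifications service is running locally; everything else is in beta.
const registrySnapshot = { notifications: 4000 };
console.log(resolveTarget('sync.postman-beta.tech', '/notification/list', registrySnapshot));
console.log(resolveTarget('sync.postman-beta.tech', '/membership/1', registrySnapshot));
```

Because the map is plain data, refreshing it from a registry changes routing without restarting the proxy or touching the developer’s configuration.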

Database proxy

The second challenge we had to solve was ensuring local services could connect to the beta MySQL database. Once a local service is plugged into Matrix, it needs to use the database used by the beta Postman services, as some flows require changing data in multiple databases. If one part of the system is operated from a local database, inconsistencies and orphan entities may arise.

For example, let’s again consider the Notifications service. Any request to this service first needs to be authorized by an Access Control service. With Matrix, the developer runs the Notifications service locally while Matrix takes care of sending requests to the beta instance of the Access Control service to authorize them. This means the access information must be shared between the local and beta environments.

Adding to this, we couldn’t directly connect to the beta database because it was network isolated inside a virtual private cloud. A straightforward solution would have been to open an SSH tunnel via a bastion host. It would have worked but would have required that developers use the database credentials for authentication. This carried a risk that developers could accidentally commit the credentials to the code repositories.

The solution we chose was a custom TCP proxy that connects to the database with a single set of credentials, which is never shared with developers, while developers connect to the proxy without having to deal with any authentication mechanism. We found kingshard (https://github.com/flike/kingshard) to be the best fit, and we baked it into the MySQL component. Developers can now connect to the beta database on localhost at port 3307 with no credentials.
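From the local service’s point of view, the result is a connection configuration with no secrets in it. The sketch below only builds such a configuration: the host and port come from the text, while the function name and the database name are placeholders.

```javascript
// Assumed values: localhost and port 3307 come from the text above; the
// database name and function name are placeholders.
function matrixDbOptions(database) {
  return {
    host: 'localhost',
    port: 3307,
    database,
    // No user or password here: the proxy holds the real credentials.
  };
}

const dbOptions = matrixDbOptions('example_db');
console.log(`mysql://${dbOptions.host}:${dbOptions.port}/${dbOptions.database}`);
```

An options object like this could be handed to a MySQL client library. Whether the proxy expects a placeholder user name is not stated in the text, so that detail is left out.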

Modularizing the Matrix

The above two components worked for the initial set of users, but we had to design these so that we could add components to the system without interfering with the existing ones. We ended up choosing Docker as our solution.

Another constraint was that the service proxy and the database proxy both required some form of secrets or credentials to connect to the rest of our infrastructure. We couldn’t just hard-code the secrets in the containers; we had to inject them into the containers at runtime.

Another consideration was that ALB routing (used in the service proxy) is controlled by the individual squads, so the routing rules could change at any time. New services also get added to the Postman service network from time to time. All of this had to be reflected in Matrix without developers spending half their time updating Matrix components.

To solve these problems, we made Matrix quite modular, with each small part acting as independently as it could:

- Matrix components are Docker containers, and the Matrix CLI’s job is to start the Docker containers while injecting any required secrets into the containers.

- Whenever we built a new component or modified an existing one, we packaged it as a new Docker image version and updated a Docker Compose file, which was stored remotely. By default, the Matrix CLI downloads this Docker Compose file on every start or restart operation, so developers get up-to-date Docker images without running update commands or even knowing that something was updated.

- The service proxy itself also talks with its cloud counterpart to continuously pick up any credential changes, so developers don’t need to download the containers repeatedly.
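The first bullet, starting containers while injecting secrets, can be sketched as follows. This is one possible approach and not necessarily how the Matrix CLI does it: the file path, the secret name `SERVICE_PROXY_TOKEN`, and the function name are hypothetical, and the remote download of the Compose file is left out.

```javascript
// Sketch only: the file path, secret names, and function name are hypothetical.
function buildComposeInvocation(composeFile, secrets, baseEnv = process.env) {
  return {
    command: 'docker',
    // "docker compose -f <file> up -d" starts the services in the background.
    args: ['compose', '-f', composeFile, 'up', '-d'],
    // Secrets travel in the child process environment, where the Compose file
    // can reference them through variable substitution, so they are never
    // written into an image.
    env: { ...baseEnv, ...secrets },
  };
}

const invocation = buildComposeInvocation('/tmp/matrix/docker-compose.yml', {
  SERVICE_PROXY_TOKEN: 'example-token',
});
console.log(invocation.command, invocation.args.join(' '));
// A CLI could then pass these to child_process.spawn(command, args, { env }).
```

Keeping the secrets out of both the image and the Compose file means the same published artifacts can be shared by every developer.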

Due to the modular architecture of Matrix, we were able to develop and ship more than five containers in a span of two months, and more are in the pipeline. The current state of the Matrix looks like this:

Taking care of AWS credentials

As squads started using the above two components, we got feedback that one major pain point was using the AWS SDK locally. So we added support for making AWS SDKs work seamlessly in the service codebase without developers needing to take care of access keys, secret access keys, and session tokens.

To understand how this component works, we need to talk about how the AWS SDK handles authentication. In general, the AWS SDK looks for access keys using CredentialProviderChain. The default order of checking is noted in its documentation.

```javascript
AWS.CredentialProviderChain.defaultProviders = [
  function () { return new AWS.EnvironmentCredentials('AWS'); },
  function () { return new AWS.EnvironmentCredentials('AMAZON'); },
  function () { return new AWS.SharedIniFileCredentials(); },
  function () { return new AWS.ECSCredentials(); },
  function () { return new AWS.ProcessCredentials(); },
  function () { return new AWS.TokenFileWebIdentityCredentials(); },
  function () { return new AWS.EC2MetadataCredentials() }
]
```

Here, we selected AWS.ProcessCredentials(), which allowed us to define an external process that the AWS SDK executes to fetch the credentials. At a high level, whenever the service uses the AWS SDK, the SDK calls a Matrix command, which returns the credentials. The Matrix command takes care of validating the credentials and refreshing them whenever they expire. This gives squads a seamless AWS authentication mechanism without requiring their involvement.
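To make the mechanism concrete, here is a minimal sketch of what such an external command might print. The JSON field names follow the output format AWS defines for `credential_process`. Everything else is an assumption: the helper names, the five-minute refresh margin, and the way fresh credentials are obtained are not described in the text.

```javascript
// Hypothetical helpers for a command invoked through credential_process.
// The JSON field names follow the AWS credential_process output format;
// the refresh margin and input shape are assumptions.
const REFRESH_MARGIN_MS = 5 * 60 * 1000;

// Decide whether cached credentials are missing or about to expire.
function needsRefresh(cached, now = new Date()) {
  if (!cached) return true;
  const remaining = new Date(cached.Expiration).getTime() - now.getTime();
  return remaining <= REFRESH_MARGIN_MS;
}

// Serialize credentials into the JSON the SDK reads from the process's stdout.
function toProcessOutput(creds) {
  return JSON.stringify({
    Version: 1,
    AccessKeyId: creds.accessKeyId,
    SecretAccessKey: creds.secretAccessKey,
    SessionToken: creds.sessionToken,
    Expiration: creds.expiration.toISOString(),
  });
}

const exampleOutput = toProcessOutput({
  accessKeyId: 'EXAMPLE_KEY_ID',
  secretAccessKey: 'example-secret',
  sessionToken: 'example-session-token',
  expiration: new Date(Date.now() + 60 * 60 * 1000),
});
console.log(exampleOutput);
```

The SDK is pointed at such a command through a `credential_process` entry in the shared AWS configuration, so the service code itself contains no key handling at all.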

The Postman engineering team enjoyed finding a solution to the challenges we faced, and we’re excited about the creation of Matrix. After installing Matrix, Postman developers can simply start their local service, and everything works as if it were running on their local system.
