Continuous API Testing with Postman

How to test APIs, monitor uptime, and make sure your APIs and microservices run as expected

Most of the content in this article, originally written by Kaustav Das Modak, first appeared on the Better Practices blog.

API Testing: A core part of Postman

API testing is a core part of what we do every day here at Postman. Postman started as a side project to address challenges in API testing and has since grown into a complete API development platform that supports every stage of the API lifecycle.

Why did API testing capture our curiosity? Software is increasingly composed of APIs. Monolithic applications are giving way to more flexible architectures, because the world moves much faster now. Building systems as APIs is becoming a business decision, not just a technology choice. APIs are the building blocks of the large software systems being built today, and more and more companies are adopting an API-first approach.

Stability, security, performance, and availability are high priorities for APIs, and these shifts have made API testing a first-class objective when building and shipping APIs.

Building APIs requires tight feedback loops

APIs represent business domain requirements. As requirements change, APIs have to evolve to keep pace with what’s needed by users, businesses and consumers. APIs must stay in harmony with other systems – those that depend on APIs and the systems that APIs depend on. They have to be flexible and still not break things as they grow and scale.

We see API testing strategy as a necessary part of the API design lifecycle. For an API-first organization like ours, designing the interface for a service or product's API is not an afterthought, and neither is designing a resilient testing system for those APIs.

The design and development of an API-first model requires a resilient testing system that allows you to react to changes. You need to know when your APIs fail and why they failed, and you need a tight feedback loop that alerts you as soon as possible. So, how do you go about building an API testing pipeline that satisfies all these requirements?

Three phases of API testing

Following best practice, API testing can be broken down into three phases:

- Writing well-defined tests for your APIs

- Running tests on-demand and on a schedule

- Monitoring alerts and analytics systems

Writing good tests

Testing systems are only as good as the tests they execute, so everything begins with well-written tests. For example, when testing HTTP APIs, you will likely want to test the response data structure, the presence (or absence) of specific parameters in the response, response timing, response headers, cookies, and response status. All of these need good test cases, and those test cases should map to business requirements such as user stories, user journeys, or end-to-end workflows. You can document test cases as behavior-driven development (BDD) specs or as epics or stories in your product management platform.
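To make the checks above concrete, here is a minimal Python sketch that evaluates one HTTP response for status, headers, cookies, required and forbidden fields, and timing. It is an illustration only, not Postman's scripting API (Postman test scripts are JavaScript). The `Response` dataclass, the `session_id`, `id`, `email`, and `password` fields, and the 500 ms budget are all hypothetical placeholders for your own business requirements.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Response:
    """Hypothetical, simplified view of an HTTP response under test."""
    status: int
    headers: dict[str, str]
    cookies: dict[str, str]
    body: dict[str, Any]
    elapsed_ms: float


def check_user_response(resp: Response, max_ms: float = 500.0) -> dict[str, bool]:
    """Return one named pass/fail result per check, like a small test suite."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    headers = {name.lower(): value for name, value in resp.headers.items()}
    return {
        "status is 200": resp.status == 200,
        "content type is JSON": headers.get("content-type", "").startswith("application/json"),
        "session cookie is set": "session_id" in resp.cookies,
        # Presence of required fields (iterating a dict yields its keys).
        "body has id and email": {"id", "email"}.issubset(resp.body),
        # Absence of a field that must never be returned.
        "body does not expose password": "password" not in resp.body,
        "id is an integer": isinstance(resp.body.get("id"), int),
        f"responds within {max_ms:.0f} ms": resp.elapsed_ms <= max_ms,
    }


if __name__ == "__main__":
    sample = Response(
        status=200,
        headers={"Content-Type": "application/json; charset=utf-8"},
        cookies={"session_id": "abc123"},
        body={"id": 7, "email": "dev@example.com"},
        elapsed_ms=120.0,
    )
    failures = [name for name, ok in check_user_response(sample).items() if not ok]
    print("failed checks:", failures)
```

Returning one named result per check mirrors how a test runner reports individual passes and failures, rather than collapsing everything into a single boolean.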

Running tests on-demand and on-schedule

Running tests on-demand and on a schedule is the key to continuous testing and a must-have for any continuous integration/continuous deployment (CI/CD) pipeline. You'll want to run tests both at build time and on a regular schedule. Your exact cadence will vary based on the scale of your systems and how frequently code changes are committed.

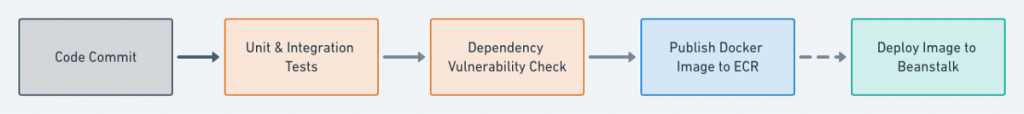

On-demand runs: At build time, it’s important to run contract tests, integration tests and end-to-end tests. How do you define build time? Code changes, merges and release flows are all typical build pipeline triggers. Depending on how your pipelines are set up, you may wish to structure the tests as stages, and run each stage of tests only after the previous stages pass. The illustration below shows how Postman’s continuous deployment pipelines are set up:

Scheduled runs: You would then want to run some tests at regular intervals against your staging and production deployments to ensure everything works as expected. This is where you would run API health checks, DNS checks, security checks, and any infrastructure-related checks. For example, you may test that an upstream dependency API responds with the proper data structure, or verify that your cloud security permissions are in place. You can even test for something as simple as the response time of your API endpoints.
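In Postman, monitors handle the scheduling for you. Purely to illustrate the idea, here is a minimal Python sketch of a scheduled response-time health check. `probe` is a hypothetical callable standing in for a real request to a health endpoint, and the `sleep` parameter is injectable so the loop can be exercised without actually waiting.

```python
import time
from typing import Callable


def timed_health_check(probe: Callable[[], int], max_ms: float) -> bool:
    """Pass if the (hypothetical) probe returns HTTP 200 within max_ms milliseconds."""
    start = time.perf_counter()
    try:
        status = probe()
    except Exception:
        # A connection error or timeout counts as a failed health check.
        return False
    elapsed_ms = (time.perf_counter() - start) * 1000
    return status == 200 and elapsed_ms <= max_ms


def run_on_schedule(check: Callable[[], bool], interval_s: float, runs: int,
                    sleep: Callable[[float], None] = time.sleep) -> list[bool]:
    """Run `check` `runs` times, pausing `interval_s` seconds between runs."""
    results: list[bool] = []
    for i in range(runs):
        results.append(check())
        if i < runs - 1:  # no need to wait after the final run
            sleep(interval_s)
    return results
```

In production you would hand the scheduling to a monitor, cron, or your CI system rather than a long-running loop; the sketch only shows what each scheduled run evaluates.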

Combining the power of the two: When you run both scheduled and on-demand tests against your APIs, you get far more complete test coverage than either provides alone. On-demand tests prevent broken APIs from shipping. Scheduled runs ensure that APIs maintain performance standards and retain quality after being integrated with the larger system or deployed to production.
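On the on-demand side, the staged approach described under On-demand runs (run each stage of tests only after the previous stages pass) is what keeps broken APIs from shipping. A minimal Python sketch, assuming each stage is a hypothetical callable that returns `True` when its tests pass:

```python
from typing import Callable

# A stage is a (name, callable) pair; the callable returns True if its tests pass.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure.

    Returns (passed, names_of_stages_that_ran).
    """
    ran: list[str] = []
    for name, run_stage in stages:
        ran.append(name)
        if not run_stage():
            # Later stages are skipped so a failing build never reaches release.
            return False, ran
    return True, ran


if __name__ == "__main__":
    passed, ran = run_pipeline([
        ("contract tests", lambda: True),
        ("integration tests", lambda: True),
        ("end-to-end tests", lambda: True),
    ])
    print("pipeline passed:", passed, "stages run:", ran)
```

Failing fast keeps feedback tight: the slow end-to-end stage only runs once the cheaper contract and integration stages are green.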

Monitoring alerts and API analytics

Now that your tests are generating data, you want to put that information to use. The third phase of a resilient API testing pipeline is connecting testing with API analytics and alert systems.

Alerting systems let your stakeholders know the moment a system fails. API analytics systems, on the other hand, give you a view of system health, performance, stability, resiliency, quality, and agility over time. If you follow a maturity model for your services, the analytics system should be connected to that model as well. This data can enrich the product design and product management roadmap by providing important metrics on what works and what doesn't. Piping it back to product management closes the feedback loop essential to API-first development.
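As an illustration of the split between alerting and analytics, here is a minimal Python sketch that rolls a history of test runs up into a pass rate and a 95th-percentile latency, and separately decides whether the latest run should trigger an alert. The `RunResult` record and the alert rule (any failed test) are hypothetical; a real setup would feed these numbers to your analytics and notification services.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class RunResult:
    """Hypothetical record of one test run (on-demand or scheduled)."""
    passed: int
    failed: int
    latency_ms: float  # e.g. mean response time observed during the run


def summarize(history: list[RunResult]) -> dict[str, float]:
    """Analytics side: roll a run history up into trend metrics."""
    if not history:
        return {"pass_rate": 0.0, "p95_latency_ms": 0.0}
    total = sum(r.passed + r.failed for r in history)
    passed = sum(r.passed for r in history)
    latencies = [r.latency_ms for r in history]
    if len(latencies) >= 2:
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        # "inclusive" keeps the estimate within the observed range.
        p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0]
    return {"pass_rate": passed / total if total else 0.0, "p95_latency_ms": p95}


def should_alert(latest: RunResult) -> bool:
    """Alerting side: notify stakeholders as soon as any test in a run fails."""
    return latest.failed > 0
```

Keeping the two functions separate reflects the distinction above: alerts react to a single run immediately, while analytics only become meaningful across many runs.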

Continuous testing with Postman

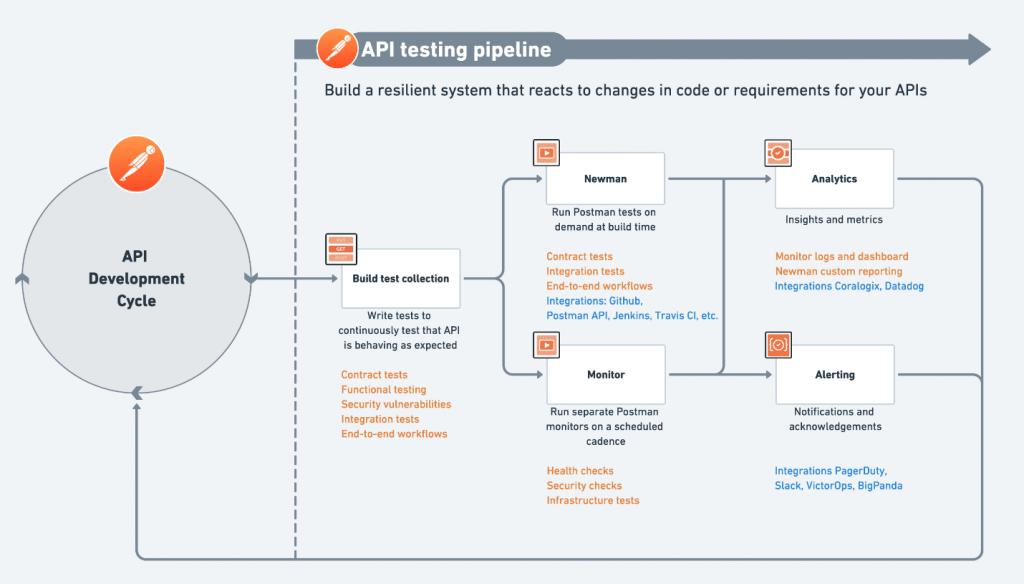

Now, let’s map the three key phases of API testing to the features Postman provides:

Writing good tests — Collections: Writing well-defined tests is where Postman Collections come in. A collection is a group of API requests that can be executed at one time. You can write tests for each request or for a group of requests. Postman tells you how many of these tests pass or fail when you run the collection. We recommend creating separate collections for each of your test suites. For example, you could have a collection that runs contract tests of a given consumer’s expectations against a producer, and you could have a separate collection that runs health checks for that service.
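Postman collections and their JavaScript test scripts run inside Postman itself. The following Python sketch only models the same shape (a collection of requests, tests attached to each request, and a pass/fail tally) to show what a collection run reports. `Request`, `send`, and `run_collection` are hypothetical names, not Postman or Newman APIs, and `send` returns canned data instead of making real HTTP calls.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A named check applied to a parsed response.
Check = tuple[str, Callable[[dict[str, Any]], bool]]


@dataclass
class Request:
    """Hypothetical stand-in for one request in a collection."""
    name: str
    send: Callable[[], dict[str, Any]]  # returns a parsed response
    tests: list[Check] = field(default_factory=list)


def run_collection(requests: list[Request]) -> dict[str, int]:
    """Send every request in order and tally how many tests pass or fail."""
    summary = {"passed": 0, "failed": 0}
    for req in requests:
        response = req.send()
        for _name, test in req.tests:
            try:
                ok = bool(test(response))
            except Exception:
                ok = False  # a test that throws counts as a failure
            summary["passed" if ok else "failed"] += 1
    return summary
```

Separate test suites, such as contract tests and health checks, would simply be separate lists of `Request` objects run independently.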

Related: Use the Contract Testing Template

Running tests on-demand and on-schedule — Newman and Monitors: Integrate Newman, Postman's command-line collection runner, with your systems to run your collections on demand as part of your CI/CD pipeline. To schedule collection runs at predefined intervals, set up monitors in Postman. Monitors can also run from multiple regions worldwide on Postman's hosted cloud infrastructure.
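Newman is a Node.js tool, typically installed with `npm install -g newman` and invoked as `newman run <collection>`. A CI job can call it from any scripting language; the Python sketch below builds the command line and runs it. The collection, environment, and report file names are hypothetical, and the flags used (`--environment`, `--reporters`, `--reporter-junit-export`, `--bail`) should be checked against `newman run --help` for your installed version.

```python
import shutil
import subprocess
from typing import Optional


def newman_command(collection: str, environment: Optional[str] = None,
                   junit_report: Optional[str] = None, bail: bool = False) -> list[str]:
    """Build a `newman run` command line for a CI job."""
    cmd = ["newman", "run", collection]
    if environment:
        cmd += ["--environment", environment]
    if junit_report:
        # JUnit XML is widely understood by CI servers for test reporting.
        cmd += ["--reporters", "cli,junit", "--reporter-junit-export", junit_report]
    if bail:
        cmd.append("--bail")  # stop the run on the first test failure
    return cmd


def run_newman(cmd: list[str]) -> int:
    """Run Newman and return its exit code; non-zero should fail the CI step."""
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError("newman is not installed (npm install -g newman)")
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    cmd = newman_command("api-tests.postman_collection.json",
                         environment="staging.postman_environment.json",
                         junit_report="newman-results.xml")
    print(" ".join(cmd))
```

By default, Newman exits with a non-zero status when tests fail, so propagating `run_newman`'s return code is usually enough to fail the pipeline step.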

Analytics and alerting — Integrations & custom requests: To set up analytics and alert systems, Postman offers pre-defined integrations with external services. By tying Postman monitors to your analytics systems, you can evaluate API health, performance and usage over a period of time. There are also integrations with notification systems which can alert you whenever monitor runs fail. Beyond these, you can always include requests in your collection that push data to third-party services. This is useful when you are running your collections on your own infrastructure using Newman.
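Inside Postman, this is done by adding a request to the collection that pushes data to a third-party service. As an alternative, a post-run step outside the collection, for example in the CI job that runs Newman, might look like the Python sketch below. The payload fields and the webhook URL you pass in are hypothetical; adapt them to whatever your analytics or notification service expects.

```python
import json
import urllib.request


def build_payload(collection: str, passed: int, failed: int) -> bytes:
    """Serialize a run summary as JSON for a hypothetical webhook."""
    summary = {
        "collection": collection,
        "passed": passed,
        "failed": failed,
        "status": "fail" if failed else "pass",
    }
    return json.dumps(summary).encode("utf-8")


def post_summary(url: str, payload: bytes, timeout_s: float = 10.0) -> int:
    """POST the payload and return the HTTP status code.

    urllib raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    req = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return resp.status
```

For example, `post_summary("https://example.com/hooks/api-tests", build_payload("health-checks", 12, 0))` would send a passing summary to a placeholder endpoint.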

Check out the following flowchart to see how these concepts work together in Postman:

Wrap-up

An API-first world requires a strong testing pipeline. To commit to APIs as a business strategy, you need a resilient process that ensures all your systems play well together and function as expected. By breaking the testing process down into three phases and testing APIs continuously, teams can be confident that the solutions they've worked hard to build keep working long into the future.

More resources

Want to learn more? Our team has written extensively on testing APIs, including…

- Learn more about automating your API tests with Postman

- From manual to automated testing: The roadblocks and the journey

- Integration testing in an API-first world

- Consumer-driven Contract Testing

- Conquering the Microservices Dependency Hell

- Snapshot Testing for APIs

Nice article.

I am wondering: when the tests run in CI/CD, the server won't be running, so how will the tests run? Can someone help clear up my confusion?

Hi Samundra! Glad to answer, although I might need a bit more clarification to better understand the question.

If you are asking how tests in a CI/CD system would work against a service (server) that isn't up and running, the answer is simple: they would all fail, because the service (server) is not running.

To put this in the context of Beta or Stage: if, for example, the Monitoring Service were not running, any API tests we have that run against it would fail. Let me know if that answers your question, and what else we can do to help!

Can Postman execute functional tests written in Groovy?

Thanks for your question, Chris. Postman executes tests written in JavaScript within its sandbox environment; you can learn more here: https://learning.postman.com/docs/writing-scripts/test-scripts/ I hope this helps, and don't hesitate to reach out if you have more questions.