Agentic AI: the rise of agents

Last year, I shared our perspective on the generative AI wave and its implications for the future of software. While even a month can feel like a lifetime in the AI age, I’ve been pleased to see our central prediction hold true: APIs are becoming a foundational building block for a new class of generative software applications.

Since that post, we’ve gained significant experience with Postbot—our AI assistant with millions of users—and worked alongside customers who are moving from AI experimentation to building real-world, production-ready applications. In this post, I want to revisit the AI landscape and contextualize its developments for engineering leaders, offering insights from Postman’s unique vantage point.

What’s been happening in the AI world

Many more foundation models

The generative AI tsunami—kickstarted by OpenAI’s ChatGPT—made AI the centerpiece of technological discourse. ChatGPT’s early experience felt almost magical, but since then, numerous competitors have emerged: Anthropic’s Claude, Google’s Gemini, Meta’s Llama, and Amazon’s newly unveiled Nova. While these models push boundaries and forge into new territories—whether through open-source approaches, enterprise-grade security, or specialized industry solutions—the capabilities they advertise are almost interchangeable. Everyone is racing to catch up to OpenAI while simultaneously trying to differentiate.

Sequoia’s article The Rise of Reasoning underscores this competitive dynamic:

“The model layer is a knife-fight, with price per token for GPT-4 dropping 98% since the last Dev Day.”

A more fundamental issue that is yet to be solved is the limited context window of all models. While context windows have grown, the way LLMs work means their ability to provide answers ultimately reaches a limit.

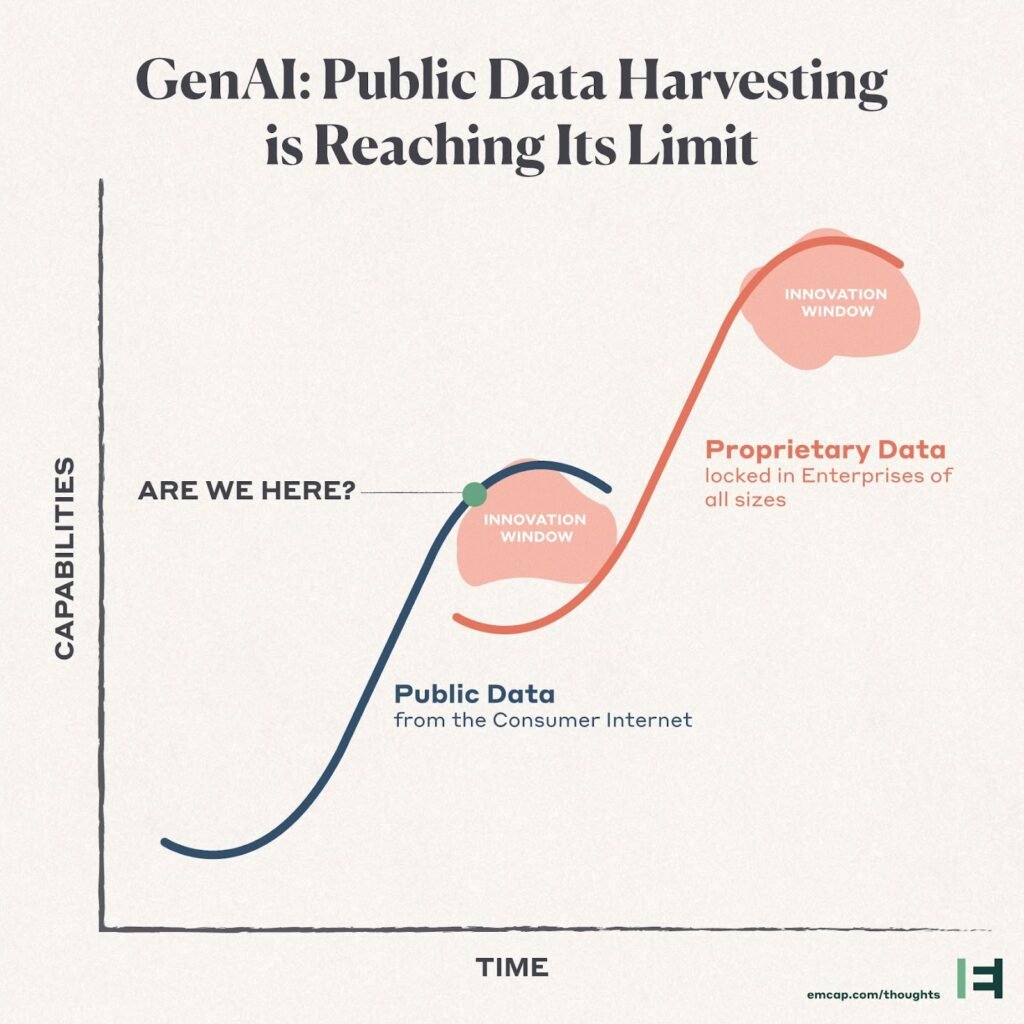

Along with this fundamental limitation, there is widespread consensus in the AI community that the training dataset for these foundation models has been exhausted.

While synthetic data shows promise, recent improvements in model accuracy stem from more than just larger datasets. The proliferation of new models and versions is fragmenting the landscape and complicating the developer experience. As companies race to implement their initial AI use cases, they’re encountering challenges with regression testing and traceability, issues amplified by the non-deterministic nature of generative AI. Meanwhile, a new trend is emerging: smaller, specialized models designed for specific tasks and datasets. These targeted models can operate efficiently whether deployed on devices or in the cloud, creating fresh opportunities for software development. Smaller models can also be cheaper and faster, helping build experiences that are not possible with a large foundation model alone.

Tomas Tunguz explores this shift further in his piece Small but Mighty AI:

“Smaller models trained on targeted data sets might outperform general-purpose giants for niche tasks.”

Getting more high-quality data to train models is not the only way to improve them, though.

Improving model accuracy: fine-tuning and RAG

At last year’s PostCon, engineering leaders highlighted a clear challenge: the gap between AI prototypes and production-ready systems, with teams spending significant time evaluating and testing models to find ones that meet their specific needs. Two approaches are proving critical for improving accuracy:

- Fine-Tuning – While developers enjoy having all the knobs to tweak, in practice, they focus on just 4-5 key adjustments. Fine-tuning is becoming less time-consuming as model providers simplify the process.
- Retrieval-Augmented Generation (RAG) – The real difference lies in context and integrating external data sources to enhance responses.

Both methods require careful orchestration of data storage, retrieval, and testing. At Postman, we use these techniques with Postbot to deliver production-quality accuracy for API documentation and testing. While general-purpose models are powerful, real-world applications demand additional work.
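To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt flow. It is not how Postbot works; it assumes a tiny in-memory document list and uses naive word overlap as a stand-in for a real embedding search. The names `DOCS`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re

# Hypothetical in-memory knowledge base; a real system would use a vector store.
DOCS = [
    "The /users endpoint returns a paginated list of users.",
    "Authentication uses a bearer token in the Authorization header.",
    "Rate limits are 100 requests per minute per API key.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (an assumed stand-in for embeddings)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: list[str]) -> str:
    """Prepend the retrieved context to the question before it is sent to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("How does authentication work with a token?", DOCS)` yields a prompt whose context section leads with the authentication document. The model call itself is left out on purpose, since it depends on the provider.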

Hallucinations remain a challenge—but they’re context-dependent. Creativity is a feature for some use cases; for others (e.g., financial analysis), it’s a dealbreaker.

Custom, domain-specific models

Beyond foundation models, teams are building custom models using proprietary datasets. As companies increasingly test and deploy AI applications across multiple clouds to optimize for performance, cost, and reliability, we see this as a key driver of multi-cloud adoption at scale. These domain-specific LLMs are often exposed through APIs, enabling seamless integration with broader software ecosystems.

In fact, AI-related traffic across the Postman platform has increased by nearly 73% in 2024 (2024 State of the API report).

NVIDIA’s NIM framework exemplifies this shift, offering AI microservices to simplify deployment.

In short, the proliferation of foundation and specialized models means one thing for developers: more APIs to choose from, and a lot more versions. Versions are emerging as the bigger pain point, as developers navigate multiple models and weigh cost, performance, and accuracy. Developers are just beginning to explore this landscape, which points to many more models and, consequently, many more APIs to evaluate when building applications. This is one significant growth vector for API usage in the world.

However, AI models alone aren’t sufficient for solving real-world problems at scale and putting learning and best practices into action. This is where Agentic AI comes in, marking the next era of AI innovation.

What’s new: agentic AI

As the AI landscape matures beyond traditional LLMs, we’re seeing a shift from single-shot, text-only models to more sophisticated systems. These agents and multi-modal systems represent the next frontier of AI capabilities. Andrew Ng captures this evolution beautifully in his talk at the Snowflake Build Summit:

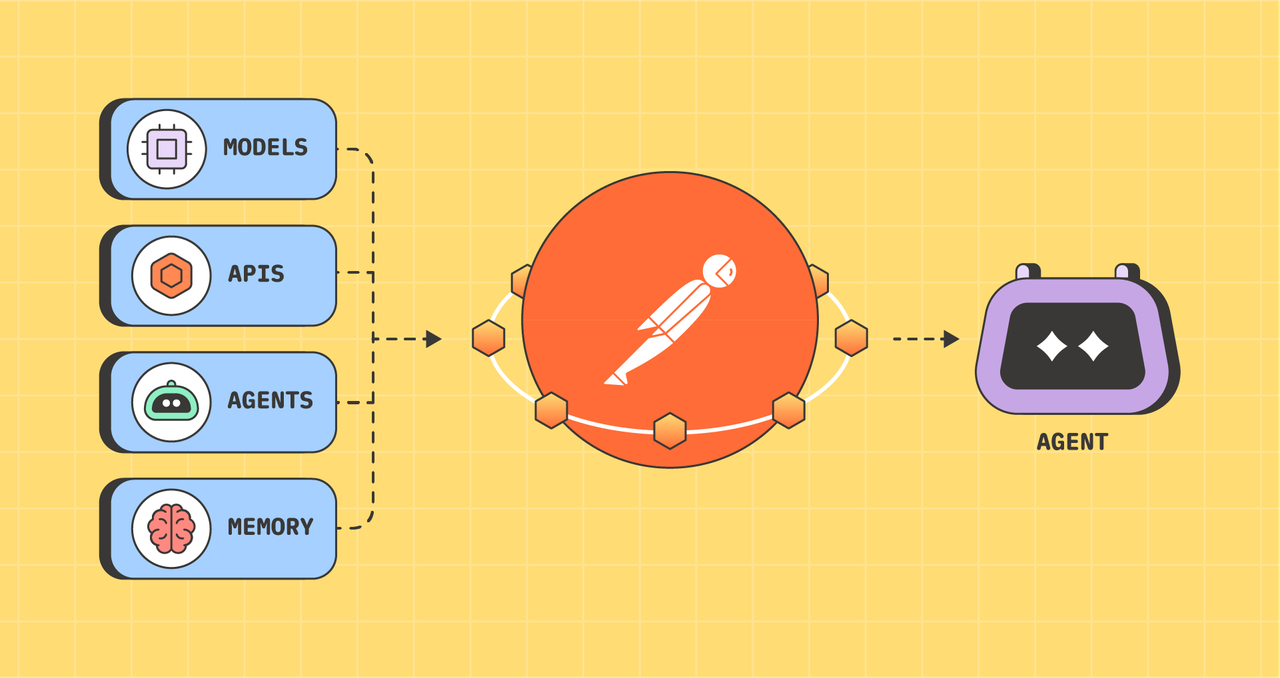

Agents combine the reasoning power of LLMs with tools (via APIs) to perform tasks in structured, multi-step workflows. They often feature multiple LLMs working together, alongside software systems, to achieve complex goals.

Andrew Ng outlines the key design components of agentic systems:

- Reflection
- Tool Use (API calls)
- Planning
- Multi-Agent Collaboration
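The tool-use and planning pieces can be sketched as a small loop: ask the model what to do, run the tool it requests, feed the result back, and repeat until it produces an answer. The sketch below is an illustration under stated assumptions, not a reference design. `fake_model` is a hard-coded stand-in for an LLM, and `get_weather` is a hypothetical tool that would wrap an HTTP API call in practice.

```python
from typing import Callable

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"

# Registry of tools the agent is allowed to call, keyed by name.
TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}

def fake_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "get_weather", "argument": "Paris"}
    return {"type": "final", "content": f"Forecast: {tool_results[-1]['content']}"}

def run_agent(task: str, max_steps: int = 5) -> str:
    """Loop until the model returns a final answer or the step budget runs out."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = fake_model(history)
        if decision["type"] == "final":
            return decision["content"]
        tool = TOOLS.get(decision["name"])
        if tool is None:
            result = f"Unknown tool: {decision['name']}"
        else:
            result = tool(decision["argument"])
        # Feed the tool output back so the next model call can use it.
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"
```

The `max_steps` cap is a deliberate choice: an agent that can call tools in a loop needs a hard bound so that a confused model cannot run indefinitely.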

Think of agents as analogous to early mobile apps. Initially, mobile apps were simple and standalone. But as they integrated with the cloud, their utility skyrocketed—transforming smartphones into essential tools for everyday life. Agentic AI holds a similar promise.

As agents gain traction, we could see a 10X–100X increase in API utility, enabling software systems to execute increasingly complex workflows. Today humans remain “in the loop,” but this will evolve to the point where humans step out entirely, depending on trust and risk factors.

How to operate in the world of agents

1. Think in systems: define API boundaries for agentic AI systems

Our attention is still fixated on the model, but we need to graduate to thinking in terms of systems. Christopher Potts, a professor at Stanford, discusses this in his talk here.

Practically, developers need to shift their mindset from “using a model” to interacting with a complete system. This perspective change reveals that to move beyond simple question-and-answer interactions, the system must become more sophisticated—and APIs are the key to enabling this sophistication.

When we view an LLM’s input/output boundaries as part of a wider system, we naturally adopt an API-first approach. This broader perspective has crucial implications for product development, safety protocols, and meeting real-world user needs—going far beyond impressive demos that struggle in production.

As we move toward multi-agent collaboration and reasoning, mastering how to connect and orchestrate multiple LLMs through APIs becomes crucial. This systematic approach enables faster iteration while maintaining quality, allowing systems to scale and deliver real value. Consider that even a seemingly simple tool like ChatGPT operates as a complex, compound system.

2. Build reusable APIs for agents

Agents rely on external tools—a.k.a. APIs—to execute actions. Reusable APIs will be critical for enabling seamless agentic workflows.
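One way to picture a reusable API for agents is a tool that carries its own machine-readable description alongside its handler. The sketch below is a hypothetical shape, not the Model Context Protocol format or any vendor's actual API. `Tool`, `create_invoice`, and `call_tool` are illustrative names, and the parameter description only borrows the general style of JSON Schema.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    parameters: dict[str, Any]   # JSON-Schema-style description of the arguments
    handler: Callable[..., Any]

    def describe(self) -> dict[str, Any]:
        """Return the description an agent can read to decide how to call the tool."""
        return {
            "name": self.name,
            "description": self.description,
            "parameters": self.parameters,
        }

def create_invoice(customer_id: str, amount_cents: int) -> dict[str, Any]:
    """Hypothetical handler; a real one would call a billing API."""
    return {"customer_id": customer_id, "amount_cents": amount_cents, "status": "draft"}

create_invoice_tool = Tool(
    name="create_invoice",
    description="Create a draft invoice for a customer.",
    parameters={
        "type": "object",
        "properties": {
            "customer_id": {"type": "string"},
            "amount_cents": {"type": "integer", "minimum": 1},
        },
        "required": ["customer_id", "amount_cents"],
    },
    handler=create_invoice,
)

def call_tool(tool: Tool, arguments: dict[str, Any]) -> Any:
    """Reject calls with missing required arguments before dispatching to the handler."""
    missing = [k for k in tool.parameters.get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"Missing arguments: {missing}")
    return tool.handler(**arguments)
```

Because the description and the handler live together, the same `Tool` can be listed to any number of agents without each integration redefining what the API expects.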

A great example of this evolution is Anthropic’s Model Context Protocol, which provides a framework for how AI agents can interact with external systems in a structured way. Read more here.

Other companies are also making strides in this space for external use:

- Stripe: Released APIs tailored for agentic workflows. Read more here.
- Amazon Alexa: Smarter integrations via APIs for real-world use cases. Read more here.

3. Assess risk factors and API access

Agents are powerful but still unpredictable. Engineering teams need to assess risk and design APIs with appropriate guardrails. While APIs are traditionally designed for human operators, agentic systems introduce new usage patterns that require proactive consideration.

Documenting APIs—so agents can interact with them effectively—will be key to ensuring safe, efficient usage.
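A simple way to act on a risk assessment is to tag each operation with a risk tier and require explicit human approval for the highest tier. The sketch below assumes three tiers and a hand-written mapping; the operation names and the `authorize` function are hypothetical, and a real policy would likely be richer.

```python
from enum import Enum

class Risk(Enum):
    LOW = 1      # read-only calls
    MEDIUM = 2   # reversible writes
    HIGH = 3     # irreversible or financial actions

# Hypothetical mapping from operation name to risk tier.
OPERATION_RISK = {
    "list_orders": Risk.LOW,
    "update_address": Risk.MEDIUM,
    "issue_refund": Risk.HIGH,
}

def authorize(operation: str, human_approved: bool = False) -> bool:
    """Allow low- and medium-risk calls; require human approval for high-risk ones."""
    # Operations missing from the mapping are treated as HIGH risk by default.
    risk = OPERATION_RISK.get(operation, Risk.HIGH)
    if risk is Risk.HIGH:
        return human_approved
    return True
```

Defaulting unknown operations to the highest tier keeps a human in the loop for anything the team has not yet assessed.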

4. Build trust layers and guardrails

Building trust in agentic systems hinges on two critical elements: guardrails for the APIs you expose and safeguards for agent behavior.

First, your APIs—whether serving agents or non-agents—must be protected from misuse. This requires implementing robust layers of authentication, authorization, rate limiting, and abuse prevention.
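Rate limiting is the easiest of these layers to show in a few lines. The following is a minimal token-bucket sketch, one common technique among several. The class name, its parameters, and the injectable clock are assumptions made for illustration, and a production limiter would also track a bucket per API key and handle concurrency.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `refill_per_second` tokens per second."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        now: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.now = now                  # injectable clock, which makes testing easy
        self.tokens = float(capacity)   # start with a full bucket
        self.last = now()

    def allow(self) -> bool:
        """Return True and spend one token if the request may proceed."""
        current = self.now()
        elapsed = current - self.last
        self.last = current
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

An agent that retries aggressively can exhaust a quota far faster than a person clicking through a UI, which is why a burst-aware limiter like this is a reasonable starting point.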

Second, thorough testing of agentic systems—both individually and holistically—is crucial for ensuring they align with human-defined values and expectations. While initiatives like OpenAI’s Model Spec have started addressing alignment challenges, agents can still behave unpredictably. Engineering teams must implement comprehensive safeguards through rigorous testing, monitoring, and validation to prevent potential harm.
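Because agent output is non-deterministic, tests tend to work better when they check invariants across many runs instead of comparing exact strings. Here is a hedged sketch of that idea. `fake_agent` is a seeded stand-in for a real agent, and the invariants in `is_valid` are invented for the example.

```python
import json
import random

def fake_agent(prompt: str, rng: random.Random) -> str:
    """Stand-in for a non-deterministic agent: wording varies, structure should not."""
    note = rng.choice(["Sure", "Done", "OK"])
    priority = rng.choice(["low", "high"])
    return json.dumps({"note": note, "action": "create_ticket", "priority": priority})

def is_valid(output: str) -> bool:
    """Check invariants on the output instead of matching exact text."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return (
        data.get("action") == "create_ticket"
        and data.get("priority") in {"low", "medium", "high"}
    )

def pass_rate(runs: int = 50, seed: int = 0) -> float:
    """Run the agent repeatedly and report the fraction of outputs that pass."""
    rng = random.Random(seed)
    results = [is_valid(fake_agent("Open a ticket", rng)) for _ in range(runs)]
    return sum(results) / runs
```

A team could then gate a release on `pass_rate()` staying above a threshold it chooses, and track that number over time as models and prompts change.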

Remember that users hold production software to much higher standards than they apply to casual uses of ChatGPT, such as homework help. For production-ready agentic systems, rock-solid reliability, safety, and compliance at scale aren’t optional; they’re essential.

Conclusion

The rise of agentic AI marks a pivotal shift in how software systems will be built and operated. This wave will highlight a truth that many engineering leaders are just beginning to realize: the power to deliver AI solutions lies in their APIs.

At Postman, we’re excited about this future and remain focused on helping developers and customers thrive in an API-first world. Stay tuned—there’s a lot more to come!
